In the early morning of May 12 (Beijing time), the Google I/O 2022 developer conference (hereinafter "Google I/O") opened. This year's event was held both online and in person, returning to the Shoreline Amphitheatre in Mountain View, California. In the two-hour keynote, Google reviewed its progress in AI, computing and data models and demonstrated applications of key technologies. It also launched two new pieces of hardware and officially previewed three more ahead of release.

Google's two missions and the tools to achieve them

The keynote opened with a video that posed a series of questions, such as how to get from point A to point B most conveniently and how to find a place. It then gave its answer: "technology makes life better for everyone and strives to create the future."

Google CEO Sundar Pichai took the stage and remarked on how good it felt to be back at Shoreline Amphitheatre. Whatever he discussed next, he tied it back to Google's mission of deepening people's understanding of information.

He started with Google Translate, which added 24 new languages this time, helping break down communication barriers so knowledge can reach more places. The theme of "distance" naturally led to Google Maps. Improved map data now covers remote areas such as parts of Africa, and 3D mapping combined with machine learning and aerial imagery makes the immersive map view more realistic and complete.

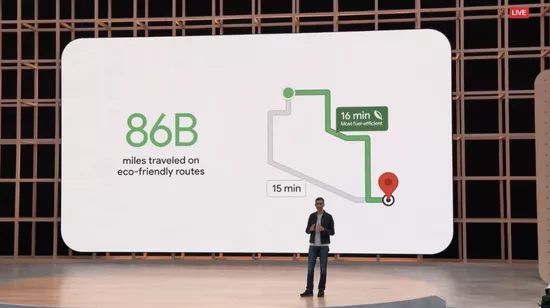

Data can even make Maps more environmentally friendly, for example by finding better routes that reduce fuel consumption and emissions.

On the video side, YouTube began automatically generating chapters for videos last year. Now, by analyzing speech and video transcripts, Google can make this segmentation more accurate, and the number of videos with auto-generated chapters will reach 80 million.

AI can also help people at work: given a very long work document, the machine can summarize what it says for you.

This technology will later come to Google Chat, where it will generate chat summaries or pick out key information from busy group chats. AI can even automatically adjust the lighting during video calls so the picture looks clearer.

The future of search

Google's best-known product is its search engine. Search used to work like a query: you typed something in and got back a list of results. Since then, questions have grown more complex. For example, a user might upload a photo and ask what kind of bird it is. That question is no longer just text; answering it also relies on Google's image recognition technology.

But that is not the end. Google wants people to be able to "search any way, anywhere" in the future.

"Multisearch" is one step Google has already taken. It uses multimodal AI to understand the intent behind a search and presents the results in an intuitive way.

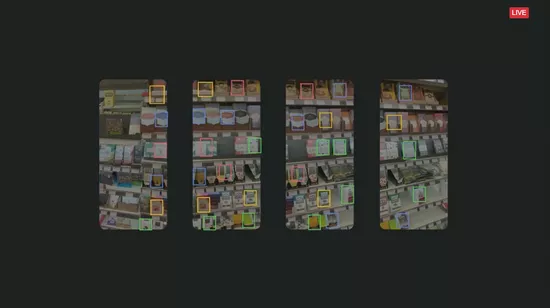

For example, to find "nut-free dark chocolate" in a store, a user scans the shelf with the phone camera through Google Lens. Lens identifies the products, applies the filtering condition, and presents the results.

This combines image recognition with result filtering. It is still search, but it feels completely different from the traditional search engine experience.

In Google's view, this is the future of search.
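The "recognize, then filter" flow can be made concrete with a minimal Python sketch. This is not how Google Lens works internally. It assumes the image-recognition step has already produced a list of products, each tagged with attributes. The names `RecognizedItem` and `filter_results`, and the sample shelf data, are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class RecognizedItem:
    """A product that a (hypothetical) image recognizer found on the shelf."""
    name: str
    attributes: set[str] = field(default_factory=set)


def filter_results(items: list[RecognizedItem], required: set[str]) -> list[str]:
    """Keep only items whose attributes include every required condition."""
    return [item.name for item in items if required <= item.attributes]


# Hypothetical output of the recognition step for one shelf
shelf = [
    RecognizedItem("Bar A", {"dark", "nut-free"}),
    RecognizedItem("Bar B", {"milk", "nut-free"}),
    RecognizedItem("Bar C", {"dark", "contains-nuts"}),
]

print(filter_results(shelf, {"dark", "nut-free"}))  # ['Bar A']
```

Splitting recognition from filtering is the point of the sketch: the same list of recognized items can be re-filtered with new conditions without scanning the shelf again.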

Google said that by combining such technologies with a corresponding skin tone scale (the Monk Skin Tone Scale), it can build more inclusive experiences, such as matching suitable makeup or filters to people with different skin tones, a politically correct move.

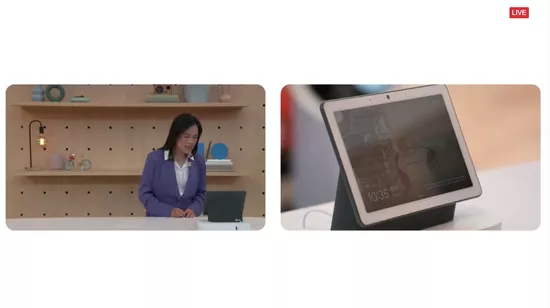

For users, improvements to consumer products are more tangible. On the Nest Hub Max smart display, Google introduced a new feature called "Look and Talk": just look at the screen and speak.

In the demo, the user says "find Santa Monica Beach" and the smart display shows matching results; the user then asks "how do I get to the first one?" and it gives directions. The exchange feels more like a conversation between people, and more natural, because the machine understands what "the first one" refers to.

Natural language processing has also become more precise. For example, saying "play so-and-so's song" to a computer, with pauses and hesitations in the middle, is not a precise request, but the machine filters out the vague parts and returns an accurate result.

Pichai then returned to the stage to discuss progress on LaMDA, the language model for dialogue applications announced at last year's Google I/O.

Learning concepts plays a vital role here. LaMDA keeps track of the topic as the dialogue goes on, so the conversation can continue instead of breaking off at every turn and forcing it to relearn what is being discussed.

This year's demo began with "I want to plant a vegetable garden," and the model then gave a series of instructions step by step.

Under the hood this is still search: LaMDA runs a series of searches behind the scenes to find answers. The difference is that it feels like asking an experienced gardener how to grow vegetables.

Google also showcased its new large language model, PaLM (Pathways Language Model), a Transformer model with 540 billion parameters.

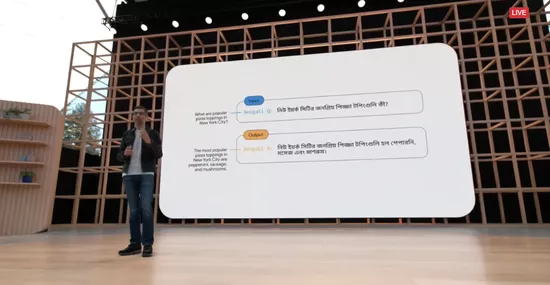

PaLM is trained on English and multilingual datasets and performs better on many difficult tasks. For example, you can ask PaLM in Bengali where the best pizza in New York is. Tricky cross-language questions like this show its proficiency in non-English languages, and it also does well on coding and arithmetic tasks.

Protected computing

For most users, the content above is rather abstract. What came next is more practical for ordinary users and also shows how Google protects data.

One example is Sign in with Google; users in China may have seen something similar on Apple devices. In short, it lets you create an account in one step.

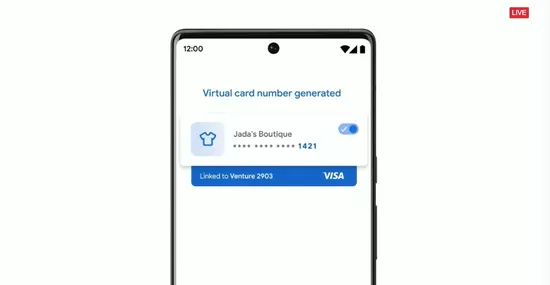

In addition, when paying with Google Pay, the real credit card number is protected by a virtual card number to prevent theft.

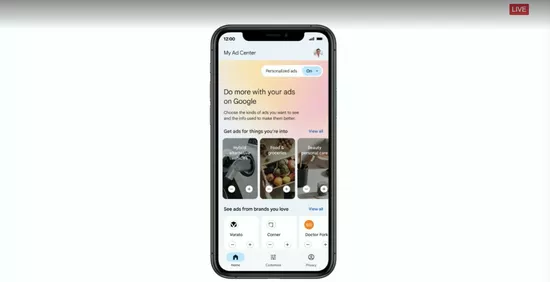

Google's business model is advertising, and it has long been criticized for how it uses user data. Now it promises to protect advertising data, for example by not using sensitive information such as sexual orientation as ad signals. In the new "My Ad Center," users can control some of their privacy settings.

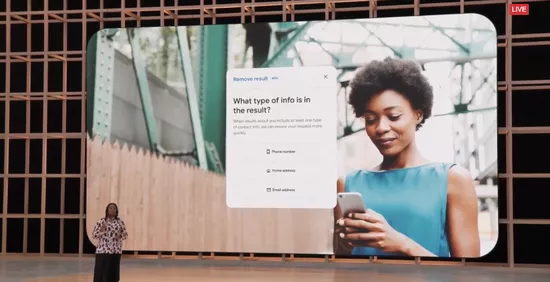

If users find their email address, phone number or other personal details in search results, they can request removal directly from the results.

Android 13 is a modest update, and Google turns its attention to tablets again

Android 13 is finally here. The presentation opened with three main themes: the phone at the center, extending from the phone to other devices, and making multiple devices work better together.

After covering some basics such as multilingual support, the focus turned to RCS (Rich Communication Services). Google now offers RCS chat to users worldwide through its Messages app, which serves as the default SMS app, making it similar to Apple's iMessage.

Another improvement is Google Wallet, which holds not only payment cards but also richer content such as e-tickets, car keys and even vaccination cards.

Android 13 and Wear OS watches also gained more safety features, such as calling for emergency help. The demo video told the story of a user who was in a car accident and was saved by his phone's emergency feature (well, Apple has plenty of stories like this too).

Wear OS also appears to be expanding its ecosystem, with several third-party apps mentioned on stage.

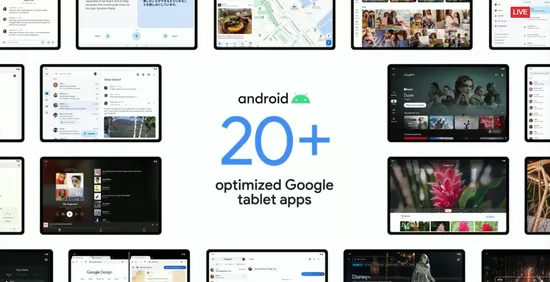

Google is finally paying attention to tablets again, with further optimizations for large-screen devices. A Xiaomi tablet even made a brief appearance on stage. Google also promised to update more than 20 of its own apps to work better on large screens.

Google's renewed emphasis on tablets is driven not only by the recent revival of Android tablet hardware but also by the emergence of foldable phones.

An unfolded foldable is effectively a new kind of tablet, and manufacturers such as Xiaomi are also trying to build out the tablet ecosystem. Tablets have long been a weak spot in the Android camp: most third-party apps are phone apps simply stretched to fill the larger screen, which looks sloppy.

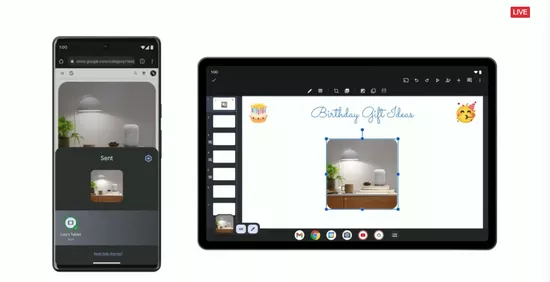

Phones and tablets are also becoming more integrated and interconnected, for example drawing on a phone and then editing on a tablet.

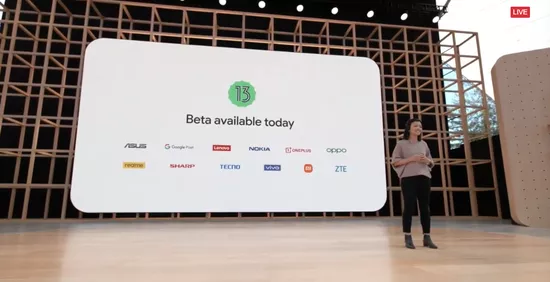

Finally, the Android 13 beta opens today. Notably, apart from Google, Nokia and Sharp, all the device makers shown on screen were Chinese.

Google's own hardware launches

Google's own "favorite son" devices have finally arrived. First up is the Google Pixel 6a, a budget version of the flagship Pixel 6. It still uses Google's in-house Tensor chip, supports 5G and will run Android 13, so the core experience matches the flagship Pixel 6.

The design is similar, but it looks less premium (the back appears to be plastic). On the back is a 12-megapixel dual-camera system. The price is lower, starting at $449, with pre-orders opening July 21 and sales starting July 28.

Like every Pixel phone before it, this one is the hardware embodiment of the Android features and AI technologies and algorithms Google had just presented, a physical vessel for Google's many technologies.

The Tensor chip's computing power lets these features run better, for example real-time translation.

Next came a flagship pair of earbuds.

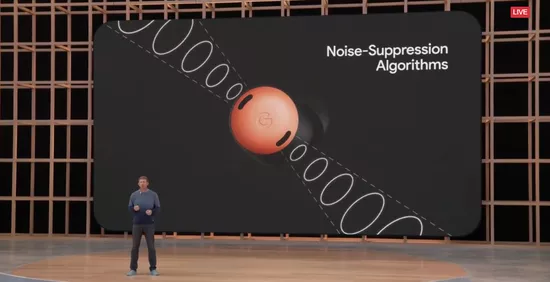

Google Pixel Buds Pro is a mouthful of a name, but the Pixel Buds line finally has earbuds with active noise cancellation, priced at $199.

Inside is a custom audio chip, with 7 hours of battery life with noise cancellation on and 11 hours with it off.

They also support multipoint connection across Google's ecosystem of devices, with switching similar to Apple's, and there is even Google's own version of spatial audio.

Three products revealed ahead of time

Beyond the affordable phone and premium earbuds released today, the more eye-catching segment was Google officially revealing three unreleased product lines: phones, a watch and a tablet.

Phones: Pixel 7 and Pixel 7 Pro. The aluminum body looks more premium, and the chip is the next-generation Tensor. No other details were disclosed.

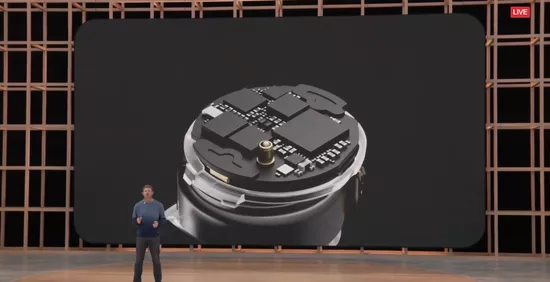

Watch: the Pixel Watch, with a rounder design and premium materials. It is meant as a companion to the Pixel 7 and Pixel 7 Pro.

The watch integrates Fitbit, the wearables company Google acquired earlier, bringing a range of health features to Google's own watch.

The tablet is slated for release in 2023, and work on adapting apps for it has already begun in Google's developer community. It looks like a Samsung product, but the back is very rounded. The three contacts on the back suggest it should be able to connect to an external keyboard.

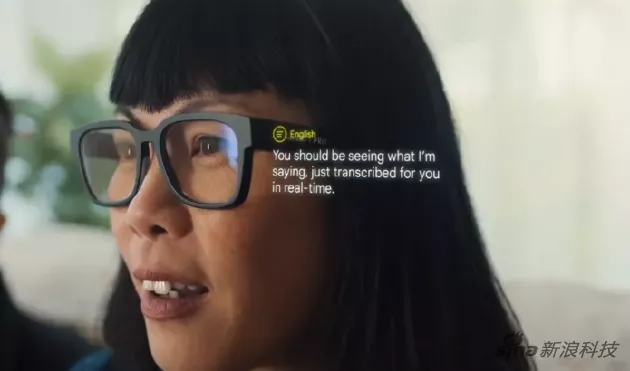

By the way, the video also showed something resembling AR glasses, but it looked more like a concept product than an official announcement.

Google did not share any details on availability. The glasses appeared only in a pre-recorded video, with no live demonstration of the device or of how you would interact with it. Still, the video painted a very cool picture of what AR might become.

Summary:

As in previous years, most of this keynote was abstract and hard to follow. Apart from professionals and engineers, ordinary viewers will find concepts such as LaMDA difficult to grasp.

Yet these are exactly the parts that showcase Google's deep technical strength in AI. This year, almost all of the technologies and achievements were tied together with search as the common thread, but the demos were somewhat dull, with no jaw-dropping moment like the AI calling a restaurant to book a table in previous years.

Unlike previous years, hardware played a big part this time: not only new phones and earbuds you can buy soon, but also Google's willful decision to reveal its own upcoming products.

In the past, Pixel products have often leaked before launch, leaving little secret. This time Google did the leaking itself and cut out the middlemen.