There was a time when deepfakes ran rampant on the Internet, with news and adult content the areas hit hardest by "face swapping". Because the audience is so large, there are many ready-made deepfake algorithms available to users, such as DeepFaceLab (DFL) and faceswap. Moreover, thanks to the popularity of deep learning, there are also low-cost or even free online tools that can train these algorithms, so that anyone so inclined can achieve their goals.

One of the most common tools is Google's Colab, a free hosted Jupyter notebook service. Simply put, users can run complex code in Colab's web interface and "borrow" Google's GPU cluster for free to train deep learning projects that depend on high-performance hardware.

But just this month, Google finally dealt a heavy blow to deepfake training on Colab

Not long ago, chervonij, the developer of the DFL-Colab project, found that Google had added deepfake to Colab's list of prohibited projects in the latter part of this month.

Chervonij also said that when he recently tried to run his own code on Colab, he encountered the following prompt:

"You are executing prohibited code, which may affect your ability to use Colab in the future. Please refer to the prohibited behaviors specifically listed on the FAQ page."

However, the pop-up is only a warning, not an outright ban, and the user can still continue to execute the code.

Some users found that Google's move seems to be aimed mainly at the DFL algorithm, which makes sense given that DFL is currently the most commonly used deepfake algorithm online. Meanwhile, faceswap, another, less popular deepfake algorithm, has been spared: it still runs on Colab without triggering the prompt.

Matt Tora, co-developer of faceswap, said in an interview with Unite.AI that he did not think Google's move was motivated by ethics:

"Colab is a tool geared toward AI education and research. Using it for large-scale deepfake training runs counter to Colab's original intention."

He added that an important purpose of his faceswap project is to use deepfake to educate users about how AI and deep learning models work. This may be why faceswap has not been targeted by Colab.

"For the purpose of protecting computing resources so that users who really need them can access these resources, I understand Google's move."

Will Colab ban deepfake projects entirely in the future? What penalties will users who ignore the warning face? Google has not yet commented on the change, so for now these questions remain unanswered.

However, we can be sure that Google does not want Colab, a platform that provides free training resources for the public good, to be abused by deepfake developers

Google Research has opened Colab to a large number of users free of charge. The purpose is to lower the hardware cost barrier to deep learning training, and even to make it easy for users with little programming knowledge to get started - that is, the so-called democratization of AI.

Because of the boom in the blockchain industry and the knock-on effects of the pandemic, chips worldwide (especially GPUs) are still largely in short supply. It would therefore be understandable if Colab blocked deepfake projects in order to save resources.

That said, apart from deepfake, the other activities Colab prohibits are ones the public widely recognizes as malicious, such as running hacking attacks and brute-forcing passwords.

Is the barrier to deepfake getting higher?

For quite a long time, Colab was almost the only viable choice for beginner and intermediate deepfake video creators who wanted output of generally acceptable image quality (480p or above) but lacked sufficient hardware.

After all, Colab has a simple interface and is easy to use, its training performance is acceptable, and it is free. There was no reason not to use it. Some of the deepfake projects mentioned above also provide code support for Colab.

Any discussion of deepfake can hardly avoid face-swapped news videos and face-swapped adult content. Silicon Star People has found that the main DFL project page directly lists face swapping in news videos as one of its main use cases, and that some of the user communities linked from the page tacitly tolerate face-swapped adult content targeting celebrities or private individuals (revenge porn), which has allowed such content to proliferate.

Now that Google has decided to prohibit deepfake projects from running on Colab, private deepfake content production is bound to take a heavy blow.

This means that beginner and intermediate deepfake producers will lose one of their most important free tools, and the cost of making such content will rise significantly.

However, according to some insiders in the field, the top professional producers who treat deepfake as a business have basically achieved fully "independent production".

These people have made a lot of money through illegal sales and member donations, and can invest in more advanced equipment. They can now produce deepfake videos with higher resolution, sharpness and facial fidelity, and can keep up stable output and revenue without relying on online services such as Colab or cloud computing.

For example, to reach 2K or even 4K resolution at 60 fps while keeping the rendering time for a single video within an acceptable range (say, a few days), you need a sizable render farm: at least 10 computers, each with two high-end NVIDIA RTX graphics cards supporting SLI and hundreds of GB of memory. The purchase cost of a single machine is already quite high, not to mention the electricity consumed during operation (rendering, cooling, etc.), so the total investment is considerable.

Unfortunately, Google's new policy has no effect at all on this group of people. The abuse of deepfake can only be curbed when society as a whole pays more attention to its negative impact and the entire technology industry takes action.

Putting deepfake in a cage: countries and major companies are taking action

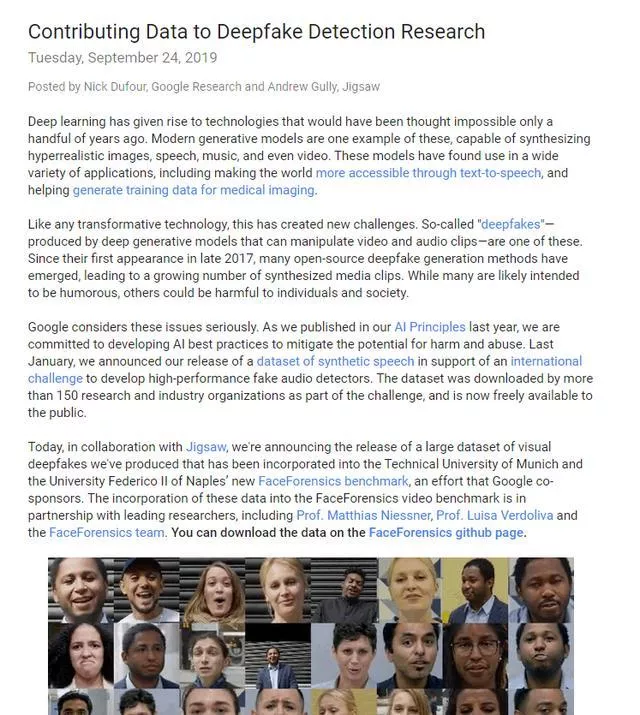

This is not the first time Google has stepped in to crack down on deepfake content production. In 2019, Google Research published a large video dataset. Behind it was Google's attempt to understand how the relevant algorithms work by producing deepfake videos internally.

Google needs to improve its ability to identify deepfakes so that it can cut off the spread of malicious face-swapped videos at the source in its commercial products (the most typical case being user video uploads to YouTube). Third-party companies can also use the dataset Google released to train their own deepfake detectors.

In recent years, however, Google Research has not paid much attention to cracking down on deepfake. On the contrary, the company recently launched Imagen, a high-fidelity text-to-image model, which has achieved impressive results but has also drawn some criticism.

Microsoft

In 2020, Microsoft Research jointly launched a deepfake detection technology called Microsoft Video Authenticator. It detects abnormal changes in grayscale values along blending boundaries in the image, analyzes video content frame by frame in real time, and generates a confidence score.

Microsoft is also working with leading media organizations, including The New York Times, the BBC and the Canadian Broadcasting Corporation, to test Video Authenticator in a news industry setting.

At the same time, Microsoft has added media content metadata verification to its Azure cloud computing platform. With it, modified video content can be compared against the metadata of the original video - similar to checking an MD5 value when downloading a file.
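The comparison described above can be illustrated with a minimal sketch. It is not Microsoft's implementation: the function names `fingerprint` and `matches_original` and the sample byte strings are hypothetical, and SHA-256 stands in for the MD5 analogy in the text. The idea is simply that a publisher releases a digest alongside the original, and anyone can recompute the digest of a copy to see whether it has been altered.

```python
import hashlib
import hmac


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 hex digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def matches_original(candidate: bytes, published_digest: str) -> bool:
    """Check whether the candidate bytes hash to the digest published with the original."""
    # compare_digest does a constant-time comparison of the two hex strings
    return hmac.compare_digest(fingerprint(candidate), published_digest)


# Stand-ins for real video bytes (hypothetical sample data)
original = b"frame-data-of-the-original-video"
tampered = b"frame-data-of-the-modified-video"

published = fingerprint(original)  # digest the publisher would release

print(matches_original(original, published))  # True
print(matches_original(tampered, published))  # False
```

A plain digest like this only shows that the bytes changed; it says nothing about who published the original, which is why real provenance systems typically combine hashes with signed metadata.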

Meta

In 2020, Facebook announced a blanket ban on deepfake videos across Facebook's products.

However, the policy has not been enforced thoroughly. For example, the well-known deepfake videos of a Chinese "knockoff" Elon Musk (mostly reposted from TikTok) can still be seen on Instagram.

At the industry level, companies and institutions including Meta, Amazon AWS, Microsoft, MIT, UC Berkeley and Oxford University jointly launched the Deepfake Detection Challenge in 2019 to encourage more, better and more up-to-date detection technologies.

Twitter

In 2020, Twitter suspended a number of accounts that frequently published deepfake videos. Other deepfake content, however, is not fully restricted. Instead, Twitter labels it "manipulated media" and provides the findings of a third-party fact-checking organization.

Startups

OARO Media: a Spanish company that provides a set of tools for digitally signing content in various ways, in order to reduce the harm done to customers when modified content such as deepfakes is circulated.

Sentinel: based in Estonia, it mainly develops deepfake content detection models.

Quantum+Integrity: a Swiss company that provides API-based SaaS services for various kinds of image-based detection, including real-time deepfakes in video conferences, screen captures or "nested" images (images of images), forged identity documents, etc.

Governments (legislative and administrative)

China: the Implementation Outline for Building a Society Ruled by Law (2020 - 2025), issued in 2020, further requires that standardized management measures be formulated and improved for the application of new technologies such as deepfakes.

United States: the National Defense Authorization Act for Fiscal Year 2020, which was signed into law in 2019, contains deepfake-related provisions that mainly require the government to notify Congress of deepfake disinformation that is transnational, organized or politically motivated.

California, New York and Illinois have their own deepfake-related laws, whose main purpose is to protect the rights and interests of deepfake victims.

EU: high-level legal instruments such as the GDPR, the proposed EU artificial intelligence framework, the copyright protection framework and policies targeting disinformation together provide overlapping coverage of matters that may relate to deepfake. However, there are currently no laws or policies at the EU level that specifically target deepfake.

At the member state level, the Dutch legislature asked the government in 2020 to formulate policies to combat deepfake adult videos, and said it would consider incorporating the issue into the country's criminal law.