A Tesla driver has been charged with a homicide offense and put on trial, the first case of its kind in the world. The key point: when the serious crash that killed two people occurred, the car's Autopilot was turned on. Accidents involving what Tesla bills as fully automatic driving have happened more than once or twice, but this time the judicial authorities concluded that the fault lay with the driver and had nothing to do with Autopilot.

The unprecedented trial, together with Musk personally overseeing the creation of a Tesla litigation department at the same time, has sharpened the dispute:

Is the system provider responsible for accidents involving automatic driving? Are the scales of the law tipping toward companies?

The car owner was tried for "murder", and Tesla testified in court

Los Angeles County prosecutors charged the owner with vehicular manslaughter.

China has no comparable charge, and it has appeared in the United States only in recent years.

By definition, vehicular manslaughter refers to

"A crime of causing death due to gross negligence, drunk driving, reckless driving or speeding."

In theory, this is a serious criminal offence because it involves human life.

In practice, however, sentences vary widely. For example, if only slight speeding causes a death, the sentence is usually at most one year in prison. If the driver was drunk or on drugs, the penalty is much more severe.

In fact, this charge was introduced to replace the ordinary manslaughter charge commonly used in traffic accident rulings in the United States. Legislators considered that penalty too heavy and unfair to defendants in traffic accident cases.

We will not discuss whether United States law is reasonable. Judging from the description of the offense, though, there must be evidence that the driver was grossly negligent while driving.

The Los Angeles court said there is enough evidence that the Tesla driver committed vehicular manslaughter.

The trial has only just begun, so the evidence has not been made public, but the accident itself offers clues.

On December 29, 2019, Kevin George Aziz Riad drove a Tesla Model S off the freeway into Gardena, Los Angeles County. He was speeding at the time.

The Tesla then ran a red light in downtown Gardena at 119 kilometers per hour and hit a Honda Civic at the intersection, killing the two people in the Civic.

The two people in the Tesla were also injured, but their lives were not in danger.

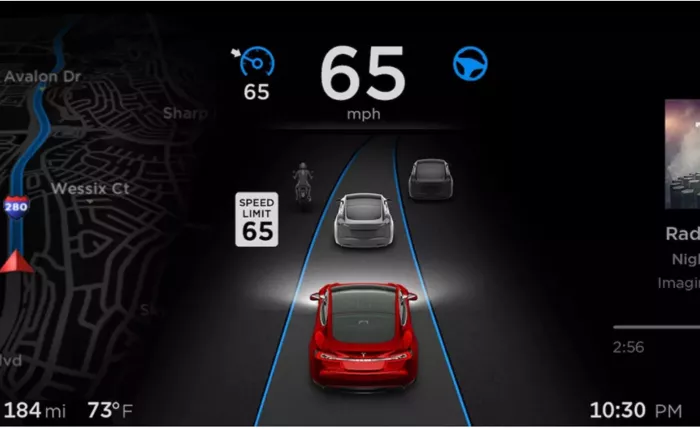

At the time of the accident, Autopilot was on.

The version of Autopilot available at the end of 2019 could not recognize traffic lights or respond to them on its own.

The rationale for the prosecution is therefore that the driver failed to supervise Autopilot effectively, which led to the accident.

Tesla also sent engineers to testify in court. They said that Autopilot was indeed on at the time of the crash and that the driver's hands were on the steering wheel, but that the vehicle neither braked nor slowed down in the 6 minutes before the accident.

This effectively backs up the charge of causing death through "negligence".

After all, following many accidents, Tesla has changed its wording. It no longer says "fully automatic driving", and instead stresses that with either Autopilot or FSD the driver must be ready to take over at any time.

Why the vehicle did not slow down after leaving the freeway and then ran the red light, and why the driver never braked even once, remains a mystery.

According to analysis by overseas netizens, the driver may have turned on NOA (Navigate on Autopilot) on the freeway. After the car left the freeway, the system automatically switched to basic Autopilot, which cannot recognize traffic lights, and the driver did not notice the change in driving mode.

The speed problem is not surprising. Tesla's NOA does not accelerate automatically after entering an on-ramp and does not decelerate automatically after leaving an off-ramp, a behavior car owners in China and abroad have long complained about.

That may be what happened in this accident.

However, Tesla had issued its warnings in advance and now has solid grounds to shift the responsibility.

The judicial authorities likewise hold that the driver's failure to properly supervise Tesla's Autopilot was gross negligence and the root cause of the accident. Whether Autopilot technology is mature has no direct bearing on the accident.

If convicted, Kevin George Aziz Riad will become the first human driver in history to bear full responsibility for an accident involving automatic driving. The case may also have a significant impact on the development of autonomous driving.

How big is the impact of a "driver killed" ruling?

*Whether the system provider should take responsibility for automatic driving accidents* is a social problem.

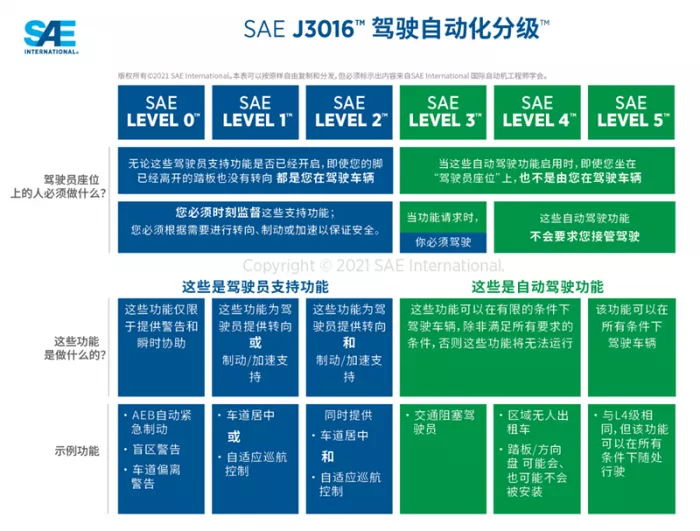

The core reason is that, at this stage, L2+ and L3 automatic driving cannot be fully driverless or fully reliable.

L2+ and L3 systems can drive automatically under specific conditions, and some even allow the driver to look away from the road. The driver needs to take over only when the vehicle prompts for it.

L3 is therefore in an awkward position: it is fundamentally different from conventional driver assistance, yet it cannot reach the reliability of L4.

Call it reliable, and it still cannot do without human intervention; call it unreliable, and existing L3 technology can already take over most of the human's work.

Since 2017, no national regulator has issued clear, detailed L3 approval rules. One reason is that, for systems such as Tesla's Autopilot and FSD, it is difficult to quantify when and under what conditions a human must take over.

As a result, every car above L2+ now on the road is operating with its legal status left vague, and there is no basis for dividing rights and responsibilities between human drivers and AI drivers.

This has also led to inconsistent handling of accidents over the past few years.

Europe is conservative. It does not allow Tesla or other automatic driving companies to advertise their systems as "driverless", and rulings tend to favor the safety of users' lives and property.

North America is more aggressive. Several serious accidents have ultimately been attributed to the human driver, such as the well-known Uber self-driving fatality and the current case.

There have been many similar accidents in China as well, but domestic manufacturers keep a lower profile and mostly settle out of court. In the field of low-speed unmanned delivery vehicles, however, there have been cases in which liability was assigned quite differently, such as the Meituan unmanned vehicle accident we covered earlier.

No one can yet say whether the manufacturer or the user is responsible.

Not long ago, Mercedes-Benz said that the manufacturer should take full responsibility for L3 accidents, which caught the attention of the industry and users alike.

But look more closely. Mercedes-Benz restricts L3 to strict conditions of use, such as weather, road section, and speed. If any one of them is not met, Mercedes-Benz does not have to bear responsibility. Few everyday driving scenarios qualify.

Mercedes-Benz's commitment is therefore far more symbolic than practical. It does nothing to advance the technology or the law, and it has prompted little meaningful discussion.

This case is different. If the felony charge in the Tesla owner's case is upheld in the United States, it will have a major impact on the whole industry.

Whether that is a step forward or backward, similar automatic driving accidents in the future will have a precedent to follow.

If liability tilts toward users, automatic driving providers will undoubtedly feel freer to mass-produce their new technologies, and may become more aggressive in both features and safety guarantees.

For companies like Tesla, the money lost in a lawsuit is trivial. What matters is that once the law decides the company must pay for technical defects, investor confidence and the company's reputation suffer, which in turn affects its operations and technology iteration.

Judging from the direction the judiciary is leaning in this case, the outcome is undoubtedly very favorable to Tesla:

Users are expected to supply manufacturers with free road test data, and they also have to answer for accidents.

Someone has offered a vivid metaphor: the automatic driving race below L4 is like a track crowded with athletes chasing one another, but unfortunately the rules of the race have not been written at all, and running fast does not mean winning.

More regrettable still, for the ordinary people who buy cars and watch the race, no matter who wins, you may not dare to get in the car and run along.

One more thing

While California authorities were prosecuting the Tesla driver for vehicular manslaughter, Tesla was making new moves of its own.

Tesla has set up a dedicated in-house department to handle litigation, covering both defending against lawsuits and initiating them.

Musk revealed that this new department is very "hardcore" and reports directly to him.

It's really intriguing.

Is this a self-protection measure after being plagued by lawsuits, or has Tesla seen the judicial winds shifting and decided it can secure a "get-out-of-jail-free card"?