For some people in the AI industry, the ultimate achievement is to create a system with artificial general intelligence (AGI), or the ability to understand and learn any task that a human being can. Long confined to science fiction, AGI has been imagined as a system that can plan, learn, and communicate with people.

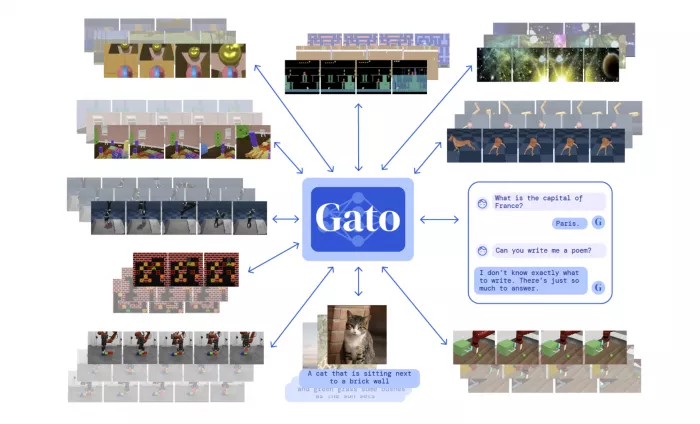

Not every expert is convinced that AGI is a realistic goal, or even a possible one. But DeepMind, the research lab backed by Alphabet, arguably took a step toward it this week with the release of an AI system called Gato. DeepMind describes Gato as a "general-purpose" system, one that can be taught to perform many different types of tasks. DeepMind researchers trained Gato to complete 604 tasks, to be exact, including captioning images, holding conversations, stacking blocks with a real robot arm, and playing Atari games.

Jack Hessel, a research scientist at the Allen Institute for AI, points out that a single AI system that can solve many tasks is not new. For example, Google recently began using a system called Multitask Unified Model, or MUM, in Google Search. It can process text, images, and videos to perform tasks ranging from finding cross-language variations in the spelling of a word to relating a search query to an image.

Like all AI systems, Gato learned by example. It ingested billions of words, images from real-world and simulated environments, button presses, joint torques, and more, all in the form of tokens. These tokens represent the data in a way that Gato can understand, so that the system can work out, for example, the mechanics of the game Breakout, or which combination of words in a sentence makes grammatical sense.
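To make the idea of tokens more concrete, here is a minimal sketch of how words and continuous readings such as joint torques could both be turned into integers in one shared sequence. Everything in it is an assumption made for illustration: the tiny vocabulary, the bin count, the value range, and the function names are hypothetical and do not reproduce DeepMind's actual scheme.

```python
# Toy illustration only: the vocabulary, bin count, and value range below are
# made-up assumptions, not DeepMind's actual tokenization scheme.

TEXT_VOCAB = {"the": 0, "capital": 1, "of": 2, "france": 3, "<unk>": 4}
NUM_BINS = 16              # hypothetical number of bins for continuous values
VALUE_RANGE = (-1.0, 1.0)  # hypothetical range for a normalized joint torque


def tokenize_text(sentence: str) -> list[int]:
    """Map each lowercased word to an integer id, falling back to <unk>."""
    unk = TEXT_VOCAB["<unk>"]
    return [TEXT_VOCAB.get(word, unk) for word in sentence.lower().split()]


def tokenize_continuous(values: list[float]) -> list[int]:
    """Clip each value, bin it uniformly, and offset it past the text ids."""
    lo, hi = VALUE_RANGE
    tokens = []
    for value in values:
        clipped = min(max(value, lo), hi)
        # Uniform binning; the top edge of the range falls into the last bin.
        bin_index = min(int((clipped - lo) / (hi - lo) * NUM_BINS), NUM_BINS - 1)
        # The offset keeps torque tokens from colliding with word tokens.
        tokens.append(len(TEXT_VOCAB) + bin_index)
    return tokens


def build_sequence(sentence: str, torques: list[float]) -> list[int]:
    """Concatenate text tokens and torque tokens into one flat sequence."""
    return tokenize_text(sentence) + tokenize_continuous(torques)
```

Calling `build_sequence("the capital", [0.0])` yields one flat list of integers in which the first two entries stand for words and the last for a torque reading. The point of the sketch is only that very different kinds of data can share a single sequence format, which is what lets one model train on all of them.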

Gato does not necessarily do these tasks well. When chatting with a person, the system often responds with superficial or factually wrong answers. Asked for the capital of France, for example, it answered "Marseille." When captioning pictures, Gato misgenders people. And when using a real-world robot, the system stacks blocks correctly only 60% of the time. Still, DeepMind claims that on 450 of the 604 tasks mentioned above, Gato performs better than an expert more than half the time.

From an architectural standpoint, Gato is not significantly different from many AI systems in production today. It shares a key feature with OpenAI's GPT-3: it is a "Transformer." Since 2017, the Transformer has become the architecture of choice for complex reasoning tasks. It has shown an aptitude for summarizing documents, generating music, classifying objects in images, and analyzing protein sequences.

Perhaps more noteworthy is that, in terms of parameter count, Gato is about two orders of magnitude smaller than single-task systems such as GPT-3. Parameters are the parts of the system learned from training data, and they essentially define the system's skill on a problem, such as generating text. Gato has only 1.2 billion parameters, while GPT-3 has more than 170 billion. DeepMind researchers deliberately kept Gato small so that the system could control a robot arm in real time.
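The size gap can be checked with a quick calculation. The sketch below uses only the two figures quoted in the article. Because GPT-3 is described as having "more than 170 billion" parameters, 170 billion is treated as a lower bound, and the function names are illustrative.

```python
import math

GATO_PARAMS = 1.2e9   # figure quoted in the article
GPT3_PARAMS = 170e9   # article says "more than 170 billion", so a lower bound


def size_ratio(larger: float, smaller: float) -> float:
    """Return how many times bigger the larger model is."""
    return larger / smaller


def orders_of_magnitude(larger: float, smaller: float) -> float:
    """Return the size gap as a base-10 logarithm of the ratio."""
    return math.log10(larger / smaller)


ratio = size_ratio(GPT3_PARAMS, GATO_PARAMS)
gap = orders_of_magnitude(GPT3_PARAMS, GATO_PARAMS)
print(f"GPT-3 is at least {ratio:.0f}x larger, about {gap:.1f} orders of magnitude")
```

With these inputs, GPT-3 comes out at least roughly 140 times larger than Gato, a gap of a little over two orders of magnitude.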