On May 30, world-class celebrity Elon Musk tweeted:

2029 is a pivotal year. I would be surprised if we had not achieved artificial general intelligence (AGI) by then. The same goes for people on Mars.

Shortly after Musk's tweet, Gary F. Marcus, a well-known artificial intelligence scientist and professor in the Department of Psychology at New York University, wrote a blog post "educating" Musk about artificial general intelligence from five angles and explaining why he thinks AGI cannot be achieved by 2029.

Musk hasn't replied to Gary Marcus' challenge.

Melanie Mitchell, an artificial intelligence expert at the Santa Fe Institute, suggested placing the bet on Long Bets. Marcus said that as long as Musk was willing to bet, he would gladly take him up on it.

The following are Gary Marcus' five points rebutting Musk. AI Technology Review has compiled them below without changing their original meaning:

Musk is a "big talk" prophet

First, Musk's timeline predictions have consistently been inaccurate.

In 2015, Musk said that truly autonomous vehicles were two years away. He has said the same thing every year since, but truly autonomous vehicles still have not appeared.

Musk doesn't pay attention to the challenge of edge cases

Second, Musk should pay more attention to the challenge of edge cases (i.e. outliers, or unusual situations) and think about what these outliers may mean for his prediction.

Because of the long-tail problem, it is easy to believe that AI problems are much simpler than they really are. We have large amounts of data about everyday events, and current techniques handle that data easily, which gives us a misleading impression. For rare events we have very little data, and current techniques struggle with them.

We humans have many skills for reasoning from incomplete information, which may be what lets us overcome the long-tail problem in everyday life. For the currently popular AI techniques, which rely more on big data than on reasoning, the long-tail problem is very serious.
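The long-tail effect described above can be illustrated with a small sketch. It assumes, purely for illustration, that event types follow a Zipf-like distribution (frequency proportional to 1/rank); the function names, the number of event types, and the "fewer than 100 examples" threshold are hypothetical choices, not figures from Marcus's post.

```python
def zipf_expected_counts(num_types, num_observations):
    """Expected number of examples per event type, assuming frequency ~ 1/rank."""
    weights = [1.0 / rank for rank in range(1, num_types + 1)]
    total = sum(weights)
    return [num_observations * w / total for w in weights]


def summarize_tail(counts, min_examples):
    """Describe the event types that have fewer than `min_examples` examples."""
    rare = [c for c in counts if c < min_examples]
    return {
        # Share of distinct event types that are data-poor.
        "rare_type_fraction": len(rare) / len(counts),
        # Share of all observations that those data-poor types account for.
        "rare_data_fraction": sum(rare) / sum(counts),
    }


if __name__ == "__main__":
    # Hypothetical setting: 10,000 kinds of situation, 1,000,000 logged observations.
    counts = zipf_expected_counts(10_000, 1_000_000)
    summary = summarize_tail(counts, min_examples=100)
    print(f"event types with < 100 examples: {summary['rare_type_fraction']:.1%}")
    print(f"share of data covering them:     {summary['rare_data_fraction']:.1%}")
```

Under these assumed numbers, the large majority of event types fall below the threshold even though the dataset as a whole is large, which is the sense in which abundant everyday data can give a misleading impression of coverage.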

In a 2016 interview entitled "Is Big Data Taking Us Closer to the Deeper Questions in Artificial Intelligence?", Gary Marcus tried to sound a warning. This is what he said:

Although there is a lot of hype about artificial intelligence and a lot of money is invested in artificial intelligence, I think this field is developing in the wrong direction. At present, there are many readily available achievements in the specific directions of deep learning and big data. People are very excited about big data and what big data brings them now, but I'm not sure whether it will bring us closer to deeper problems in artificial intelligence, such as how we understand language or how we reason about the world.

…

Think of driverless cars. You will find that driverless cars are great in ordinary conditions. If you put them somewhere sunny in Palo Alto, the vehicle will perform very well. But if you put the car somewhere it snows or rains, or somewhere it has not seen before, these cars will inevitably have problems. Steven Levy wrote an article about the Google auto factory, in which he mentioned that one of the achievements at the end of 2015 was that they had finally got the system to recognize leaves.

The system can indeed recognize leaves, but for uncommon things it cannot obtain that much data. Humans can reason with common sense: we can try to figure out what a thing is and how it got there. All the system can do is memorize things, and that is the real limit.

▲ Tesla Autopilot crashes into a $3 million jet

Unexpected situations have always been the scourge of contemporary artificial intelligence techniques, and may continue to be until a real revolution arrives. This is why Marcus is willing to guarantee that Musk will not launch a Level 5 autonomous vehicle this year or next year.

Outliers are not completely unsolvable, but they remain a major problem, and so far there is no known robust solution. Marcus believes that people must move away from their heavy reliance on existing techniques such as deep learning. There are still seven years to 2029, and seven years is a long time. However, if AGI is to be achieved before the end of this decade, the field needs to invest in other ideas. Otherwise, outliers alone are enough to sink the goal of AGI.

Artificial general intelligence covers a wide range

The third thing Musk needs to consider is that AGI is a broad problem, because intelligence itself covers a wide range. Marcus quotes Chaz Firestone and Brian Scholl here:

There is not only one way of thinking, because thinking is not a whole. On the contrary, thinking can be divided into parts, and different parts of it operate in different ways: "seeing color" and "planning vacation" operate in different ways, while "planning vacation" is different from "understanding a sentence", "moving limbs", "remembering an event" or "feeling an emotion".

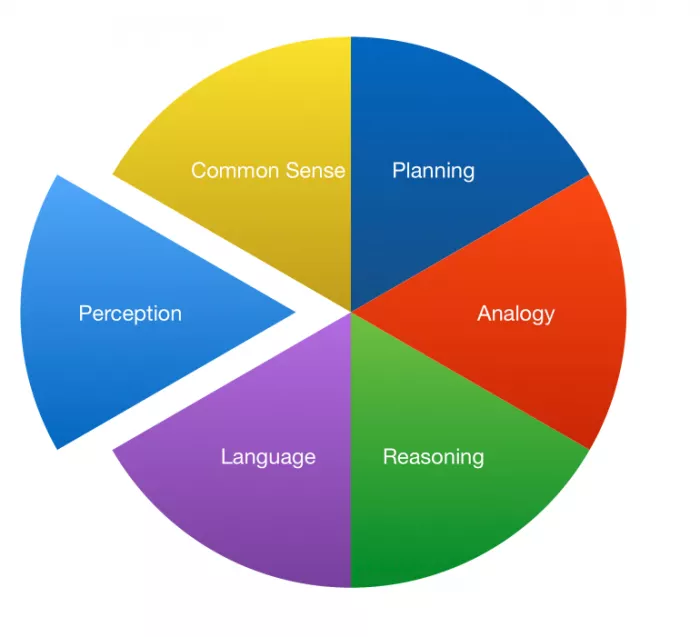

For example, deep learning does quite well in identifying objects, but does less well in planning, reading or language understanding. This situation can be represented by the following figure:

At present, AI does well in some aspects of perception, but other areas still need work. Even within perception, 3D perception remains a challenge and scene understanding is unsolved. There is still no stable or reliable solution for common sense, reasoning, language or analogy. In fact, Marcus has been using this pie chart for five years, and the state of AI has hardly changed.

In his article "Deep Learning: A Critical Appraisal", published in 2018, Marcus concluded:

Despite these questions, I don't think we should give up deep learning.

On the contrary, we need to redefine deep learning: deep learning is not a general-purpose solvent, but a tool. In addition to this tool, we also need hammers, wrenches and pliers, not to mention chisels, drill bits, voltmeters, logic probes and oscilloscopes.

Four years later, many people still hope that deep learning can become a panacea, but Marcus still considers this unrealistic. He still believes that more techniques are needed. Realistically, seven years may not be enough to invent these tools (if they do not already exist) or to bring them from the laboratory into production.

Marcus reminded Musk of the "production hell" of 2018 (Musk said the mass-production stage of the Model 3 electric car was like hell, and called it "production hell"). Integrating, in less than a decade, a set of technologies that have never been fully integrated before will be very demanding.

Marcus said, "I don't know how Musk intends to build Optimus (Tesla's humanoid robot), but I can guarantee that the AGI required by a general-purpose household robot is far more than that required by a car. After all, driving a car is more or less the same from one road to the next."

Complex cognitive systems have not been built yet

The fourth thing Musk needs to realize is that we still do not have an appropriate methodology for building complex cognitive systems.

Complex cognitive systems have too many moving parts, which usually means that the people building driverless cars and similar systems end up playing a giant game of whack-a-mole, often solving one problem only for another to pop up. Patch after patch sometimes helps and sometimes does not. Marcus thinks it is impossible to reach AGI without solving this methodological problem, and he thinks that no one has yet put forward a good proposal.

Debugging deep learning systems is very difficult because no one really understands how they work, and no one knows how to fix problems other than by collecting more data, adding more layers, and so on. The kind of debugging familiar from classical programming does not apply: because deep learning systems are so hard to interpret, people cannot reason about what the program is doing in the same way, nor can they rely on the usual process of elimination. Instead, the deep learning paradigm involves a great deal of trial and error, retraining and retesting, not to mention many experiments with data cleaning and data augmentation. A recent Facebook report frankly acknowledged that there were many difficulties in training the large language model OPT.

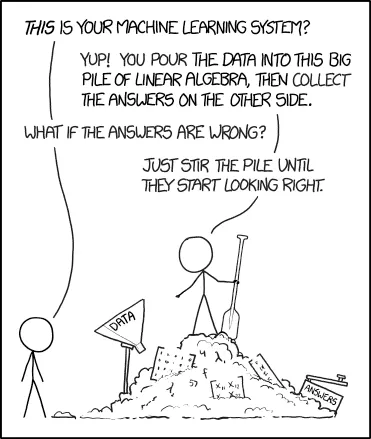

Sometimes this is more like alchemy than science, as shown in the following figure:

▲ "Is this your machine learning system?"

"Yes, you pour the data into this pile of linear algebra, and then go to the other side to pick up the answer."

"What if the answer is wrong?"

"Then mix it up in this pile of things until the answer looks right."

Program verification may ultimately help, but again, there are no tools for writing verifiable code in deep learning. If Musk wants to win the bet, he may have to solve this problem too, and solve it soon.

Bet criteria

The last thing Musk needs to consider is the terms of the bet. If you want to bet, you have to set ground rules. The term AGI is rather vague, as Marcus said on Twitter a few days ago:

I define AGI as "flexible and general intelligence, with resourcefulness and reliability comparable to or exceeding human intelligence."

Marcus also offered to make a bet with Musk and to set specific ground rules for it. He and Ernie Davis wrote the following five predictions at the request of people working with Metaculus:

By 2029, AI will still be unable to watch a film and tell you accurately what is going on (Marcus called this the "comprehension challenge" in The New Yorker in 2014), or answer questions such as who the characters are and what their conflicts and motivations are.

By 2029, AI will still be unable to tell you exactly what happened while watching the film (Marcus called it "understanding challenge" in the new Yorker magazine in 2014), nor can it answer the questions of who these roles are, what their conflicts and motivations are.

By 2029, AI will still be unable to read novels and accurately answer questions about plots, characters, conflicts, motives, etc.

By 2029, artificial intelligence will still be unable to work as a competent cook in an arbitrary kitchen.

By 2029, AI will still be unable to reliably write more than 10,000 lines of bug-free code from natural language specifications or through interaction with non-expert users. (Gluing together code from existing libraries does not count.)

By 2029, artificial intelligence will still be unable to take arbitrary proofs from the mathematical literature written in natural language and convert them into a symbolic form suitable for symbolic verification.

If Musk (or anyone else) succeeds in breaking at least three of these predictions in 2029, he wins. If only one or two are broken, AGI cannot be said to have been achieved, and Marcus wins.
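The settlement rule can be written down as a small sketch. The short labels in `PREDICTIONS` and the function name `bet_winner` are hypothetical shorthand for the five predictions above. The article only spells out the cases of "at least three" and "one or two" broken predictions; treating zero broken predictions as a win for Marcus is an assumption here.

```python
# Hypothetical short labels for the five predictions listed above.
PREDICTIONS = (
    "film comprehension",
    "novel comprehension",
    "competent cook in any kitchen",
    "10,000+ lines of bug-free code",
    "formalizing natural-language proofs",
)

# Threshold stated in the article: at least three broken predictions.
BROKEN_NEEDED_FOR_MUSK = 3


def bet_winner(broken):
    """Return "Musk" or "Marcus" given the predictions that AI has broken."""
    broken_set = set(broken)
    unknown = broken_set - set(PREDICTIONS)
    if unknown:
        raise ValueError(f"not part of the bet: {sorted(unknown)}")
    # Assumption: fewer than three (including zero) means Marcus wins.
    return "Musk" if len(broken_set) >= BROKEN_NEEDED_FOR_MUSK else "Marcus"


if __name__ == "__main__":
    print(bet_winner(["film comprehension", "novel comprehension"]))
```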

Marcus was eager to make the bet and asked Musk, "Do you want to bet? How about $100,000?"

What do you think? Who do you think will win?

Reference links:

https://garymarcus.substack.com/p/dear-elon-musk-here-are-five-things?s=w

https://www.ted.com/talks/elon_musk_elon_musk_talks_twitter_tesla_and_how_his_brain_works_live_at_ted2022

https://arxiv.org/abs/1801.00631

https://www.wsj.com/articles/elon-musk-races-to-exit-teslas-production-hell-1530149814