In the face of Google's interests, academic ethics may have to "go a little sideways".

(edited by Du Chen)

According to an exclusive report in the New York Times, Google dismissed an AI researcher in March this year after he had long been at odds with his colleagues and had questioned and criticized a high-profile paper published by the company.

In June last year, Google AI published a paper titled "A graph placement methodology for fast chip design", claiming that a reinforcement learning approach built on EdgeGNN can design some chip components better than humans can. The paper (hereinafter the "chip paper") appeared in Nature and has been highly influential in the industry. Jeff Dean, the head of Google AI, is one of its authors.

The researcher, Satrajit Chatterjee, had doubts about the chip paper, so he led a team that wrote a paper (hereinafter the "refutation paper") attempting to refute some of its key claims.

However, according to four anonymous Google employees, once the refutation paper was written, the company first refused to publish it and then quickly dismissed Chatterjee.

"We rigorously examined some of the claims made in the refutation paper and ultimately determined that it did not meet our publication standards," Zoubin Ghahramani, vice president of Google Research, told the New York Times.

Chatterjee also appears to have left front-line AI research and joined a venture capital firm (the author has not been able to confirm this).

|Authors removed, critics gagged: is Google's academic culture really this dark?

The story goes like this:

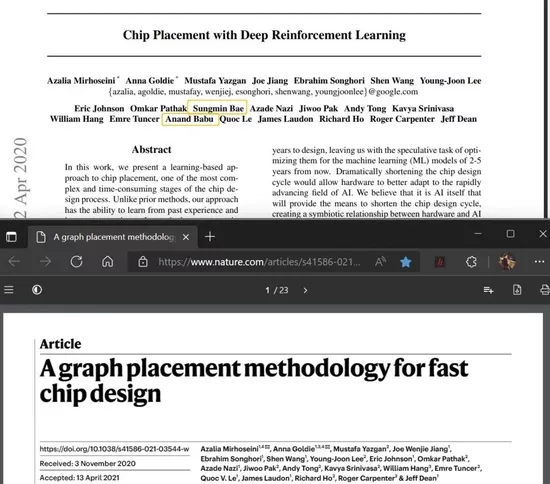

Before the chip paper appeared in Nature, Google had published a preprint titled "Chip placement with deep reinforcement learning" in April 2020.

According to several anonymous insiders quoted by the New York Times, Google at the time attached great importance to AI-driven chip design and was eager to monetize the technology as soon as possible.

When the preprint was published, Google asked Chatterjee whether the technology could be sold or licensed directly to chip design companies.

However, Chatterjee, a researcher who had previously worked at Intel and had extensive experience in the chip industry, poured cold water on Jeff Dean's hopes. He emailed colleagues that he had "reservations" about some of the claims in the preprint and questioned whether the technology had been rigorously tested.

Chatterjee was not the only Google employee on the team to question the study. Two co-authors of the preprint, Anand Babu, founder of the Google AI kernel team, and Sungmin Bae, a software engineer, also supported Chatterjee's view.

Meanwhile, Google could not wait to make money from this paper.

Satrajit Chatterjee. Photo source: personal website

Google AI revised the preprint, changed its title, submitted it directly to Nature, one of the most prestigious journals in academia, and succeeded in getting it published (this is the chip paper mentioned above).

However, Silicon Star People has learned that the retitling, author removal and resubmission of this paper caused considerable controversy within Google AI. Some employees found the whole thing very strange:

First, why was the paper retitled and submitted again?

Second, if a new version was being submitted, why was it not reviewed by the company's internal paper review committee?

Finally, and strangest of all: why did the new Nature version drop the names of the two authors who had voiced dissent about the study? Was the implication that they had contributed nothing to the new version, so that every trace of them could simply be erased, as if they had never contributed to this research at all?

Above: the April 2020 preprint. Below: the June 2021 Nature version (the "chip paper"), from which the two authors' names have been removed. Source: arXiv, Nature

To settle the dispute, Jeff Dean authorized employees including Chatterjee, Bae and Babu to challenge the chip paper, and promised that their follow-up report (the refutation paper) would go through the publication approval committee process in accordance with the company's established policy.

Before long, Chatterjee and his colleagues finished the refutation paper, titled "Stronger baselines for evaluating deep reinforcement learning in chip placement".

In the refutation paper, the authors put forward several new baselines, that is, reference algorithms against which a method is measured: a method that performs worse than the baseline is not acceptable and is not worth publishing.

According to the refutation paper, the baselines proposed by its authors performed better than the method used in Google's chip paper while requiring far less computing power. Its ablation results also point to weaknesses in the chip paper's algorithm.

Moreover, the authors argue that the work of human chip designers cannot serve as a strong baseline. In other words, the chip paper's comparison of a reinforcement learning algorithm against humans is not a convincing one.

With these findings in hand, Chatterjee and his colleagues submitted the refutation paper to Google's publication review committee. After a wait of several months, it was ultimately rejected. Google AI executives responded that the refutation paper did not meet the publication standards.

The authors even appealed to the company's CEO, Sundar Pichai, and to Alphabet's board of directors, arguing that the rejection might violate the company's own principles on AI research publication and ethics.

However, their resistance was soon put down. Before long, Chatterjee received notice of his dismissal.

Anna Goldie, a co-author of the chip paper, tells a different story. She told the New York Times that Chatterjee had tried to seize control three years ago, and that she has been the victim of his "false information" attacks ever since.

We do not know the direct reason for the dismissal of Chatterjee, who had voiced dissent. But Silicon Star People has learned from Google employees that some of them believe the real reason is that he stood in opposition to the company's interests and to projects championed by certain core Google AI executives.

In some people's view, even a large company with a flat structure and fair systems like Google will inevitably bend its rules when needed and push out dissenters in order to protect the company's interests and its senior executives' reputations.

|An employee dismissed over a conflict of interest, and a protest by "name change"

This is hardly the first time Google AI has landed in trouble over academic disagreements and office politics.

Timnit Gebru, a member of the Stanford AI Lab and an influential former Google researcher, was abruptly dismissed by Google two years ago, which left a very bad impression on many of her peers at the time.

Coincidentally, Gebru was dismissed for much the same reason as Chatterjee: she stood in opposition to the company's interests, and the company refused to publish her paper.

(A note up front: Timnit Gebru is a recognized expert on AI bias, but she is a controversial figure. Many people feel that her "social justice warrior" persona outweighs her impartiality as a scholar, and some of her peers have questioned her on this.)

Timnit Gebru. Photo source: Wikimedia Commons, Creative Commons license

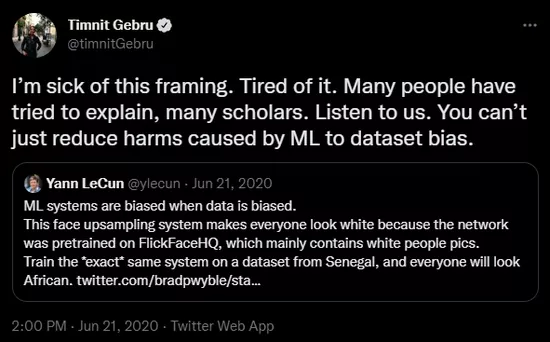

In 2020, Gebru got into an online confrontation with Turing Award winner Yann LeCun, one of the "three godfathers" of AI.

At the time, someone had used PULSE, a model that reconstructs faces from low-resolution images, on a photo of Obama, and the output was a white face. LeCun commented on this, arguing that the biased result was caused by bias inherent in the dataset.

This statement was criticized by many people, including Gebru. Gebru said she was disappointed by LeCun's remarks, because bias in AI algorithms does not come only from data. She has done a great deal of research and published papers in this field, and her view has always been that AI bias does not come solely from datasets, so fixing datasets alone cannot fully solve the problem.

LeCun posted more than a dozen tweets to explain his views further, but Gebru and her supporters saw this as lecturing experts on their own subject: LeCun may be a "godfather of AI", but Gebru is the authority on AI bias.

The war of words between LeCun and his critics, including Gebru, lasted half a month and ended when LeCun "quit Twitter".

Gebru's blunt attacks on LeCun, a veteran machine learning expert, on social media were seen by some senior figures inside Google as damaging the company's friendly relations with academia and industry. Gebru had won a temporary "victory", but she did not fully grasp how serious the matter was, and dark clouds were already gathering over her head.

Large models (typified by language models with very large parameter counts) have been enormously popular in AI research in recent years. Google, OpenAI, Microsoft, Amazon, BAAI and other institutions have invested heavily in this field, giving rise to a series of very large language and neural network models and related technologies, including BERT, T5, GPT, Switch-C and GShard.

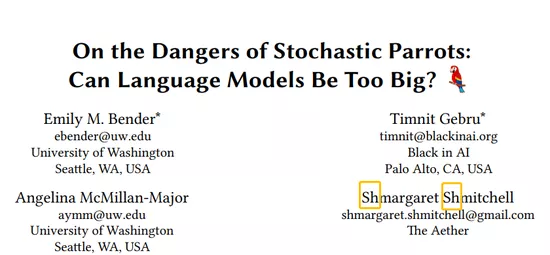

Also in 2020, Gebru's team wrote a paper titled "On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?", which set out to expose the dangers of large language models in practical use and to criticize their potential effect on AI bias.

This is far from a niche line of research. Previous studies have found that large language models such as GPT-2 and GPT-3 reinforce existing social prejudice and discrimination (including on gender and ethnicity) when used in real-world settings, causing harm to actual users.

There is nothing wrong with the main views that Gebru's team expressed in this paper. In Jeff Dean's opinion, however, the paper was very short, relied more on description and citation than on experimental results, and lacked empirical scientific content, so it did not meet Google's bar for public release and was rejected.

A reason that may be closer to the heart of the matter is that publishing this paper would have amounted to opposing Google's own efforts on large language models in recent years, which Google AI management believed would seriously damage morale.

Gebru insisted that she would find a way to publish the paper even without the company's approval. Google asked her to remove the authors' Google affiliation from the paper, which would signal that the work was done privately by the authors and not endorsed by the company. Gebru flatly refused this request as well.

As for Gebru's departure, Google said that she resigned (internal employees revealed that Gebru did threaten to resign at the time). Gebru, however, said she was fired.

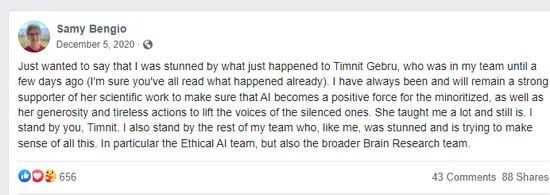

Samy Bengio, to whom Gebru reported at Google, said at the time that he was stunned. Bengio held the title of Distinguished Scientist at Google, was a 14-year veteran of the company and was one of the founding members of the original Google Brain team (he is also the brother of Yoshua Bengio, one of the three godfathers of AI). He left Google in 2021 out of dissatisfaction with Gebru's dismissal.

The Gebru team's paper was eventually published in March 2021 at FAccT, the ACM's interdisciplinary Conference on Fairness, Accountability, and Transparency. However, two of the four authors could not appear on the author list as Google employees.

It is worth noting that although Gebru had parted ways with Google before the paper was published, another author, Margaret Mitchell, was still working for Google at the time of publication (and was later dismissed).

In the published version of the paper, she "changed her name", adding "Sh" in front of it to satirize the company's silencing of her:

Source: Wikimedia Commons, Creative Commons license

But the most outrageous part was still to come.

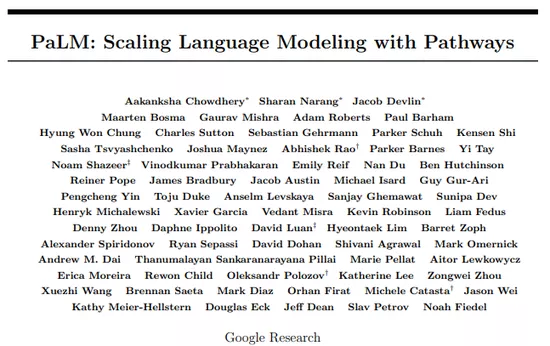

At the beginning of last month, Google AI published another paper introducing PaLM, a new very large language model developed by the team, with 540 billion densely activated parameters.

In its sections on model architecture and ethical considerations, the PaLM paper cites, at least twice, the Gebru team's paper that Google refused to publish the year before last.

In the ethical considerations section, the paper states that because completely eliminating social bias from training data and models is not very feasible, it is important to analyze the biases and risks that may appear in the model. Here it cites and draws on the analysis approach used by Gebru et al. in the rejected paper.

What is more, Jeff Dean is also an author of the PaLM paper. That makes this very awkward indeed.

![Mitchell, a former employee, appears in the citation list](https://img.16k.club/techlife/2022-05-05/20a449b7455059721cd3c23a442de01e.webp)

Above: ironically, Mitchell, a former employee, also appears in the citation list under the name she used in protest.

Gebru said:

"These AI bosses (at Google) can do whatever they want. They don't have to consider that I was fired from the company and that my paper was judged substandard by the company. They don't have to think about the consequences. I'm afraid they have long forgotten what happened back then."

Finally, many people may want to know: why have there been so many farces in Google's AI research organization in recent years, all of them involving a conflict between employees' research directions and the company's interests?

A former Google employee familiar with Google AI offered Silicon Star People the following comment:

"They want to 'launch satellites' to attract more HR and PR attention, they want to put AI research results into production as quickly as possible, and because of some controversial projects they also need to show a greater sense of social responsibility. You cannot have it all."

(Note: by "launching satellites", that is, making showy, inflated announcements, the former employee means that some of Google's very large model research was not state of the art at the time of release. For example, Google's 1.6-trillion-parameter Switch Transformer model did not outperform comparable models with fewer effective parameters, and its API was so hard to use that it could not produce an impressive demonstration the way GPT-3 did.)

There is no doubt that Google AI has become the benchmark among technology companies' organizations doing basic and applied AI research.

Given that many of Google AI's research results make their way quickly into Google's core products, which have hundreds of millions or even billions of users, it is fair to say that Google AI's research also matters greatly to the world.

At the same time, it is undeniable that Google / Alphabet is a for-profit listed company that must answer to shareholders and deliver stable, sustainable growth. AI is no longer a new technology, and it is now highly commercializable, so Google's internal expectations for integrating industry, academia and research in AI can only be rising.

Against this background, it is not hard to understand why research leaders such as Jeff Dean go to such lengths to protect the company's investment and reputation in AI research.

It must be acknowledged that these leading figures were themselves pioneers in academic AI, and it would be an insult to say that they have no regard for academic ethics. Unfortunately, when the company's interests are at stake, those who hold a position have to do what the position demands. When the stakes are this high, perhaps academic integrity can only be set aside a little for the time being.

Jeff Dean speaking at the Google developer conference. Photo source: Du Chen | Silicon Star People / PingWest