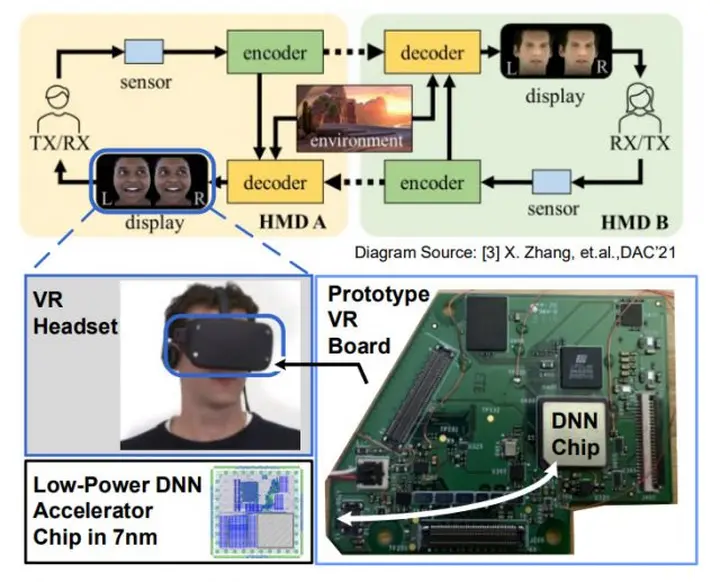

Researchers at Meta Reality Labs have developed a prototype VR headset with a built-in custom accelerator chip designed specifically for AI processing, making it possible to render the company's photorealistic Codec Avatars on a standalone headset.

Since well before the company changed its name, Meta has been working on its Codec Avatars project, which aims to make nearly photorealistic avatars in VR a reality. Using a combination of on-device sensors (such as eye tracking and mouth tracking) and AI processing, the system animates a detailed, realistic likeness of the user in real time.

Earlier versions of Codec Avatars required an NVIDIA Titan X GPU for rendering, which put them out of reach of mobile standalone headsets such as Meta's latest Quest 2.

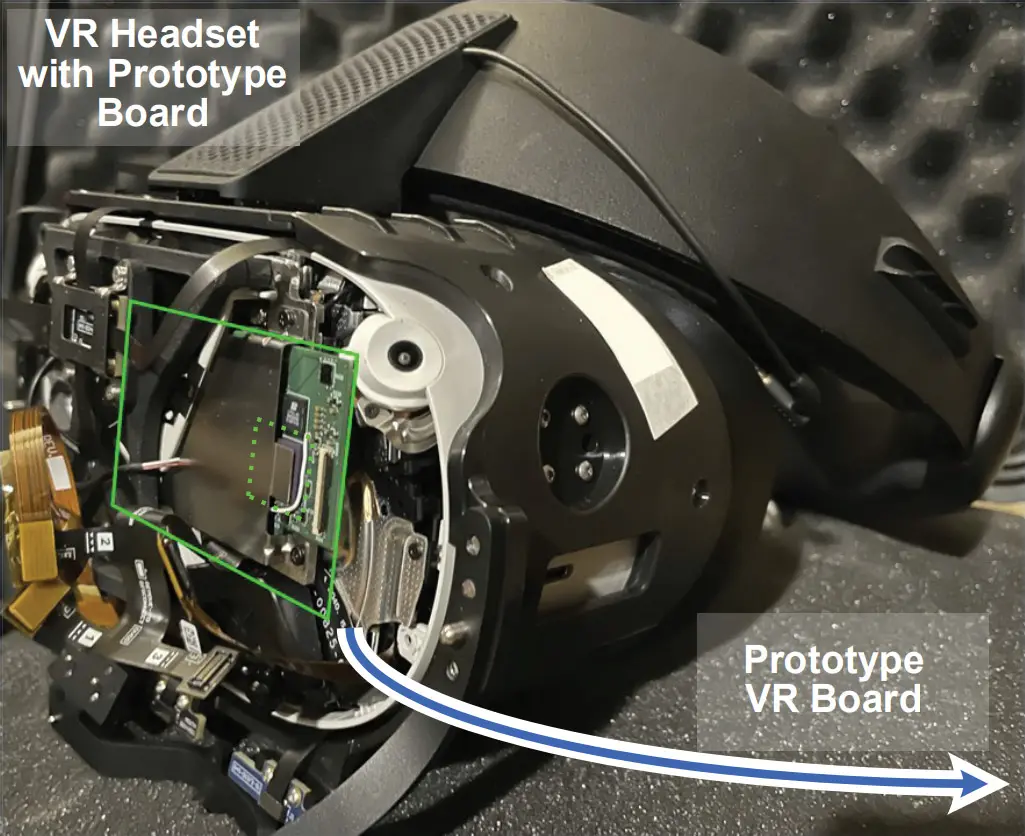

The company has therefore been researching how to run Codec Avatars on low-power standalone headsets, as shown by a paper presented at the 2022 IEEE Custom Integrated Circuits Conference (CICC) held last month. In the paper, Meta reveals that it has built a custom chip on a 7 nm process to serve as an accelerator dedicated to Codec Avatars.

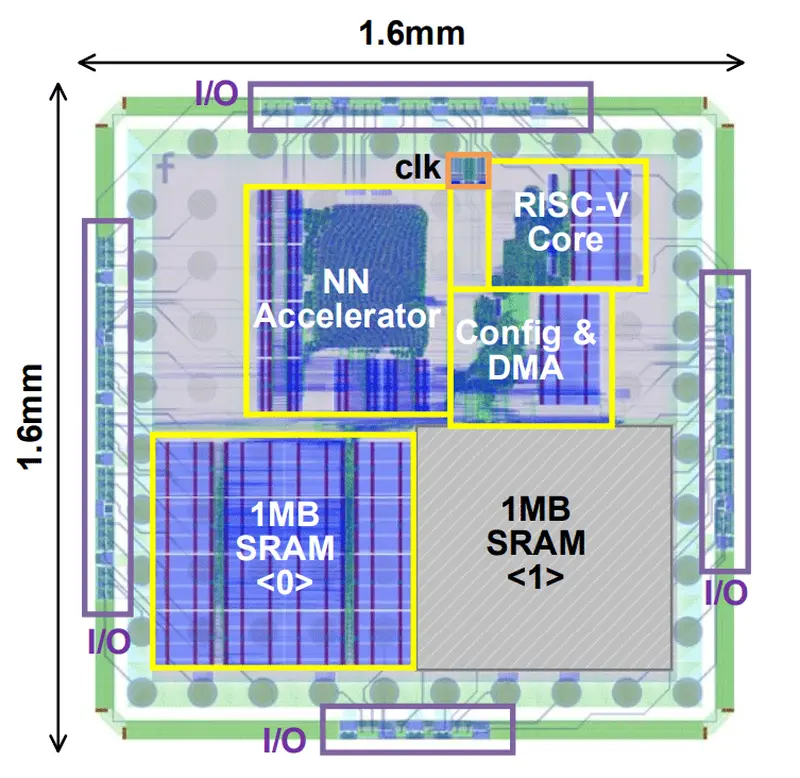

According to the researchers, the chip is far from off-the-shelf. The team designed it around an important part of the Codec Avatars processing pipeline: analyzing the incoming eye-tracking images and generating the data required by the Codec Avatars model. The chip occupies an area of only 1.6 mm².

"The test chip, fabricated in a 7 nm technology node, features a neural network (NN) accelerator consisting of a 1024 multiply-accumulate (MAC) array, 2MB of on-chip SRAM, and a 32-bit RISC-V CPU," the researchers wrote. In turn, they re-architected part of the Codec Avatars AI model to take advantage of the chip's specific architecture.
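To give a rough sense of what a 1024-MAC array means for throughput, the sketch below counts the multiply-accumulate operations in a single convolution layer and divides by 1024 to get an idealized cycle count. The layer shape is hypothetical, and the sketch assumes perfect utilization (every MAC unit busy every cycle, no memory stalls); the paper's actual array layout and clock speed are not covered here.

```python
import math

# From the article: the NN accelerator has a 1024-MAC array.
MAC_ARRAY_SIZE = 1024


def conv2d_macs(h_out: int, w_out: int, c_in: int, c_out: int, k: int) -> int:
    """MACs for a standard (non-grouped) k x k 2D convolution, ignoring bias."""
    # Each output element needs c_in * k * k multiply-accumulates.
    return h_out * w_out * c_out * c_in * k * k


def ideal_cycles(macs: int, array_size: int = MAC_ARRAY_SIZE) -> int:
    """Lower bound on cycles, assuming every MAC unit is busy every cycle."""
    return math.ceil(macs / array_size)


# Hypothetical layer: 64x64 output map, 16 -> 32 channels, 3x3 kernel.
macs = conv2d_macs(64, 64, 16, 32, 3)
print(macs, ideal_cycles(macs))  # 18874368 18432
```

Real utilization is lower, because data movement and layer shapes that don't map evenly onto the array leave some MAC units idle.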

"By re-architecting the convolutional [neural network]-based eye gaze extraction model and tailoring it to the hardware, the entire model fits on the chip, mitigating the system-level energy and latency costs of off-chip memory accesses. By efficiently accelerating the convolution operation at the circuit level, the presented prototype [chip] achieves 30 frames per second performance with low power consumption at a small form factor," the researchers said.
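The two constraints in that quote, keeping the whole model in on-chip memory and sustaining 30 frames per second, can be checked with simple arithmetic. The sketch below assumes "2MB" means 2 MiB and counts only the model's weights (activations and buffers also need SRAM); the parameter count is hypothetical, since the article doesn't give the model's size.

```python
# Assumption: "2MB on-chip SRAM" is taken as 2 MiB.
SRAM_BYTES = 2 * 1024 * 1024


def frame_budget_ms(fps: float) -> float:
    """Time available to process one frame at the given frame rate."""
    return 1000.0 / fps


def fits_on_chip(num_params: int, bytes_per_param: int, sram_bytes: int = SRAM_BYTES) -> bool:
    """True if the model's weights alone fit in on-chip SRAM (ignores activations)."""
    return num_params * bytes_per_param <= sram_bytes


print(f"{frame_budget_ms(30):.1f} ms per frame")  # 33.3 ms per frame
# Hypothetical 1.5M-parameter model: fits with 8-bit weights, not with 32-bit floats.
print(fits_on_chip(1_500_000, 1), fits_on_chip(1_500_000, 4))  # True False
```

Weights that don't fit on chip must be fetched from off-chip memory every frame, which is the energy and latency cost the researchers say their re-architected model avoids.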

By accelerating a computationally intensive part of the Codec Avatars workload, the chip not only speeds up the process but also reduces the power required and the heat produced. It can do this more efficiently than a general-purpose CPU thanks to its custom design, which in turn informed the re-architected software design of Codec Avatars' eye-tracking component.

The headset's general-purpose processor (in this case, Quest 2's Snapdragon XR2) doesn't sit idle, however. While the custom chip handles part of the Codec Avatars encoding process, the XR2 manages the decoding process and renders the avatar's actual visuals.

The work must have been quite multidisciplinary, as the paper credits 12 researchers from Meta's Reality Labs: H. Ekin Sumbul, Tony F. Wu, Yuecheng Li, Syed Shakib Sarwar, William Koven, Eli Murphy-Trotzky, Xingxing Cai, Elnaz Ansari, Daniel H. Morris, Huichu Liu, Doyun Kim, and Edith Beigne.

It's impressive that Meta's Codec Avatars can run on a standalone headset, even if a special chip is required. What we don't know is how the visual rendering of the avatars is handled. The underlying scans of users are highly detailed and may be too complex to render in full on Quest 2. It's unclear how much of the "photorealistic" part of Codec Avatars survives in this case, even if all the underlying pieces are there to drive the animation.