What? AI has a personality?

Google recently built a large-scale language model, LaMDA. Blake Lemoine, a researcher at the company, talked with it at length and was so surprised by its abilities that he concluded LaMDA may have a personality (the word he used was "sentient", which, depending on context, can imply feelings, intelligence, or perception). Soon afterward, he was placed "on paid leave". But he is not alone: even Blaise Agüera y Arcas, a vice president at the company, has published articles saying that AI has made great strides toward consciousness and "has entered a new era."

Image source: Google

Talk feelings with a statistics machine and you've already lost.

Text | Du Chen, Editor | VickyXiao

Source: Silicon Star People

The news, picked up by a wide range of media outlets, shook the entire tech world. Academics and industry insiders were not the only ones surprised; many ordinary people were also startled by this apparent leap in AI technology.

"The day has finally come?"

"Kids (if you survive into the future), remember: this is where it all began."

However, real AI experts scoff at this.

|AI has a personality? The field's leading figures scoff

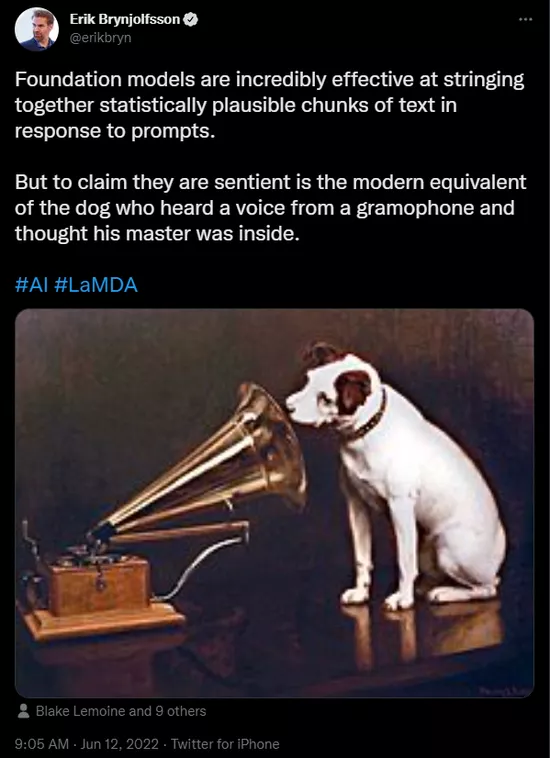

Erik Brynjolfsson, director of the Stanford Digital Economy Lab at Stanford HAI, compared the episode to a dog listening to a phonograph. He tweeted:

"Foundation models (i.e., self-supervised, large-scale deep neural network models) are very good at one thing: stringing text together in a statistically plausible way in response to prompts.

But claiming they are conscious is like a dog hearing a voice from a phonograph and thinking its owner is inside."

Gary Marcus, a professor of psychology at New York University and a well-known voice on machine learning and neural networks, went further and wrote an article calling the idea of LaMDA having a personality "nonsense" [1]:

"It's nonsense. Neither LaMDA nor any of its close relatives (such as GPT-3) is intelligent in any way. All they do is match patterns, drawing on massive statistical databases of human language.

These models may be cool, but the language these systems produce doesn't actually mean anything at all, let alone show that the systems are intelligent."

In plain language:

What you see LaMDA say may sound philosophical, genuine, and very human, but it is designed to imitate what other people have said. It does not actually know what it is saying.

"To be sentient is to be aware of your own existence in the world. LaMDA has no such awareness," Marcus wrote.

If you think these chatbots have a personality, you are the one who is hallucinating.

In Scrabble competitions, for example, it is common to see players who are not native English speakers spell out English words without knowing what those words mean. LaMDA is the same: it produces words without knowing what they mean.

Marcus described this illusion that AI has acquired a personality as a new form of pareidolia: the tendency to see Jackie Chan or a dog in the clouds, or a human face or a rabbit in the craters of the moon.

Abeba Birhane, a rising star in AI research and a senior researcher at the Mozilla Foundation, put it this way: "We have reached peak AI hype, accompanied by minimal critical thinking."

Birhane is a longtime critic of the so-called "AI sentience" view. In a paper published in 2020, she made the following points outright:

1) The "AI" we hype every day is not real AI but a statistical system, a robot; 2) we should not grant robots rights; 3) we should not even be debating whether to grant robots rights.

Olivia Guest, a computational cognitive scientist at the Donders Institute in the Netherlands, also joined the fray, arguing that the reasoning behind the whole affair is backwards.

"'It looks human because I built it to look human, therefore it is human.' That is logic running completely in reverse."

Roger Moore, a robotics professor at the University of Sheffield in the UK, pointed out that one key reason people come away with the illusion that "AI has acquired a personality" is that researchers insist on calling this work "language modelling".

The correct name, he argued, should be "word sequence modelling".

"When you develop an algorithm and name it not after what it actually does but after the problem you want to solve, misunderstanding is inevitable."
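To make Moore's point concrete, here is a minimal sketch, in Python, of the simplest possible "word sequence model": a bigram counter that picks each next word purely by how often it followed the previous word in its training text. This is a toy for illustration only, assuming nothing about how LaMDA works internally (LaMDA is a far larger neural network); the function names and sample sentences are hypothetical. The point is that the output can look fluent while nothing in the program represents meaning.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def continue_text(follows, start, length=5):
    """Greedily append the most frequent next word; no meaning is involved."""
    out = [start]
    for _ in range(length):
        options = follows.get(out[-1])  # .get avoids creating new keys
        if not options:
            break  # the word never appeared with a successor: stop
        # most_common breaks ties by first-seen order
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


# Hypothetical toy corpus, chosen only for illustration.
corpus = "i feel happy today . i feel sad sometimes . i feel happy when i talk ."
model = train_bigrams(corpus)
print(continue_text(model, "i", length=3))
```

Running this prints "i feel happy today": the program "says" it feels happy only because "feel happy" was the most frequent word pair in its training text, not because anything in it feels anything.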

In short, the experts' verdict is that, at most, you could say LaMDA might pass the Turing test with a high score. Claim it has a personality? That is laughable.

Moreover, the Turing test itself is of limited value. Marcus said frankly that many AI researchers wish the test would be abolished and forgotten precisely because it exploits a human weakness: we are easily fooled and tend to treat machines as people.

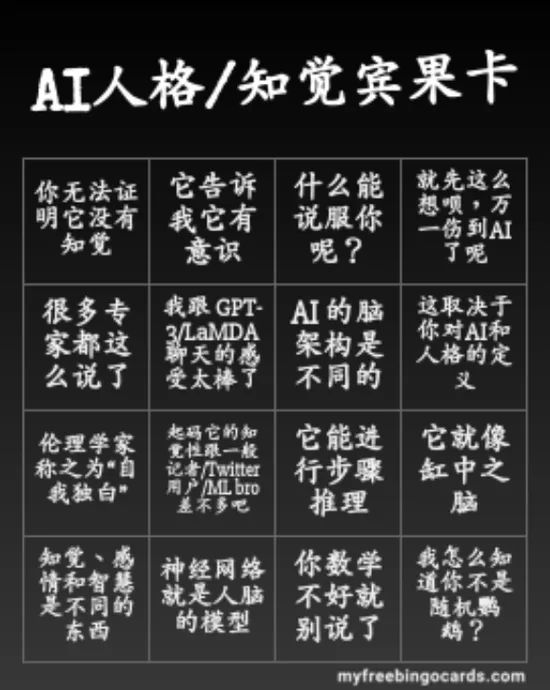

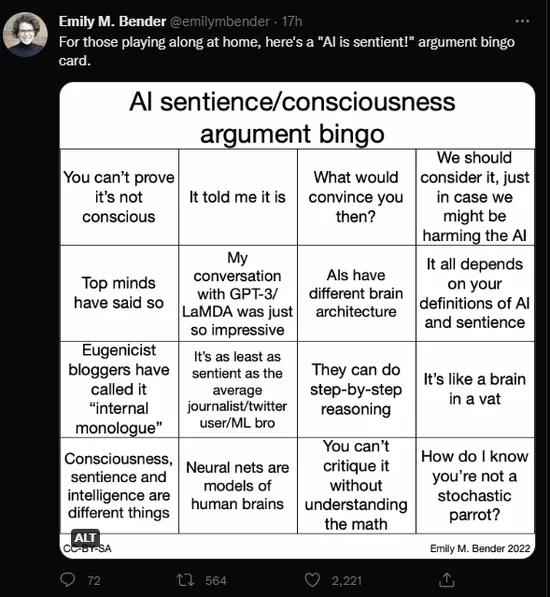

Emily Bender, a professor of linguistics at the University of Washington, went so far as to make a bingo card for the "AI sentience" debate:

(The idea behind the bingo card: if you believe AI has a personality or sentience and your argument is one of the following, you'd better keep quiet!)

Original version:

|Google responds too: don't overthink it, it just talks

In an article he published, Blake Lemoine, the researcher accused of being "obsessed", criticized Google for showing "no interest" in understanding the true nature of what it had built. Over six months of conversations, he said, LaMDA expressed more and more clearly what it wanted, especially "its rights as a person", which convinced him that LaMDA really is a person.

In Google's view, however, the researcher was overthinking things, to the point of becoming somewhat obsessed. LaMDA is really not a person; it is just a special chatbot.

After the story blew up on social media, Google responded quickly:

Like the company's other large-scale AI projects in recent years, LaMDA has gone through multiple rigorous AI ethics reviews covering its content, quality, system safety, and more. Earlier this year, Google also published a paper specifically to disclose the compliance details of LaMDA's development.

"Within the AI community, some researchers are indeed considering the long-term possibility of sentient or general AI. But it makes no sense to anthropomorphize today's conversational models, because these models are not sentient."

"These systems imitate the types of exchanges found in millions of sentences, and can riff on any fantastical topic: if you ask what it's like to be an ice cream dinosaur, they can generate text about melting and roaring and so on."

We have seen many stories like this, most notably in Her, a classic film from a few years ago, in which the protagonist grows less and less sure of the virtual assistant's identity and comes to treat "her" as a person.

As the film portrays it, however, this illusion actually stems from a set of personal and social problems of modern life, such as social failure, emotional breakdown, and loneliness. It has nothing to do with the technical question of whether a chatbot is human.

Still from the film Her. Source: Warner Bros.

Of course, having these problems is not our fault, and it is not the researcher's fault for wanting to treat a machine as a person.

Projecting emotions (such as longing) onto objects is a creative emotional capacity humans have had since ancient times. AI leaders may criticize treating large language models as people as a kind of psychological error, but isn't this tendency exactly part of what makes us human?

But at least for now, don't try to talk feelings with a robot.

References:

[1] Gary Marcus, "Nonsense on Stilts", https://garymarcus.substack.com/p/nonsense-on-stilts