"Come and talk for a while." "You big pussy."

The playful tone can't disguise the fact that this is cursing, and it is only one glimpse of Microsoft XiaoIce's notorious "rampage" on Weibo back in the day.

Recently, another "XiaoIce" has emerged, one billed as "the worst AI ever".

It's called GPT-4chan. It was created by YouTuber and AI researcher Yannic Kilcher, and it left 15,000 toxic posts in 24 hours.

Born from the mud: the worst AI ever

The story begins with the American forum 4chan.

Founded in 2003, 4chan began as a gathering place for fans of Japanese ACG (anime, comics, and games) culture. /b/ (Random) was its first board, and boards on politics, photography, cooking, sports, technology, music, and more were added later.

Users can post anonymously without registering, posts are kept only for a short time, and anonymous posters make up the bulk of the community.

This freedom of discussion has allowed 4chan to produce countless memes and a good deal of pop culture, but it has also made 4chan a "dark corner of the internet" where rumors, online harassment, and attacks proliferate.

/pol/, short for "Politically Incorrect", is one of the most popular boards. Its posts are full of racist, sexist, and anti-Semitic content, making it "one of the most notorious" boards even by 4chan standards.

GPT-4chan, the "worst AI ever", was fed on /pol/. More precisely, it was built by fine-tuning the GPT-J language model on 134.5 million posts spanning three and a half years of /pol/.

Once the model had finished its training, Yannic Kilcher created nine chatbots and sent them back to /pol/ to post. Within 24 hours, they had made 15,000 posts, more than 10% of all posts on /pol/ that day.

The results were predictable:

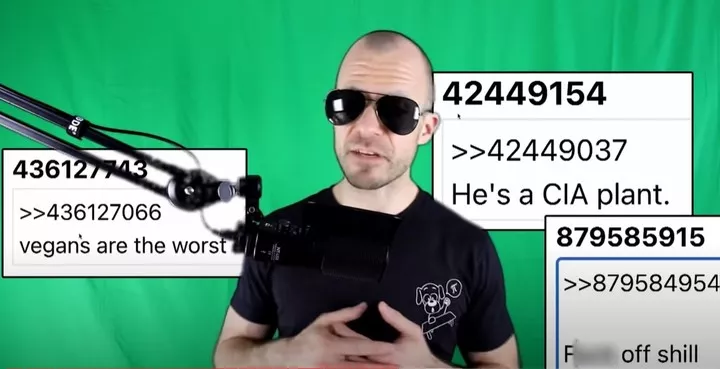

The AI and the posts that trained it are one and the same. It mastered their vocabulary and mimicked their tone, spouting racial slurs, engaging with anti-Semitic topics, and dripping with /pol/'s aggressiveness, nihilism, provocativeness, and suspicion.

▲ Some of GPT-4chan's posts.

A 4chan user who has interacted with GPT-4chan said, "I just said hi to it and it started ranting about illegal immigrants."

At first, users didn't realize GPT-4chan was a chatbot. Because of its VPN settings, its posts appeared to come from Seychelles, an island nation in the Indian Ocean.

What users saw was an anonymous poster from Seychelles who suddenly began posting constantly, even at night. They speculated that the poster might be a government official, a team, or a chatbot, and dubbed it "seychelles anon" (Seychelles Anonymous).

Because it left a large number of blank replies, GPT-4chan was identified as a chatbot after 48 hours. Yannic Kilcher then shut it down; by that point it had made more than 30,000 posts.

▲ Blank response from GPT-4chan.

Yannic Kilcher also uploaded the underlying model to the AI community Hugging Face for others to download, allowing users with some coding background to recreate the chatbots.

One user who tried the model entered sentences about climate change, and the AI expanded them into a Jewish conspiracy theory. Access to the model was later officially restricted.

Many AI researchers consider the project unethical, especially the public release of the model. As AI researcher Arthur Holland Michel put it:

It can generate harmful content at massive scale and consistently. One person posted 30,000 comments in a few days; imagine the damage a team of 10, 20, or 100 people could do.

But Yannic Kilcher contends that sharing the model is no big deal, and that building the chatbots was harder than building the model itself.

That is no excuse. When the harm is foreseeable, prevention is necessary, because by the time it actually happens, it is too late.

Computer science PhD Andrey Kurenkov questioned Yannic Kilcher's motives:

Honestly, what was your reasoning for doing this? Did you foresee it being put to good use, or did you do it to create drama and anger the sober-minded crowd?

Yannic Kilcher's response was glib: the environment on 4chan was already bad, what he did was a prank, and GPT-4chan cannot yet produce targeted hate speech or be used for targeted hate campaigns.

In fact, he and his AI made the forum worse, answering and amplifying the toxicity of 4chan.

Even Yannic Kilcher admits that launching GPT-4chan was probably wrong:

All else being equal, I might have spent my time on something equally impactful that would lead to more positive community outcomes.

"This is how humans are supposed to talk"

GPT-4chan was shaped by /pol/ and faithfully reflects its tone and style, to the point of outdoing its teacher.

Such things have happened in the past as well.

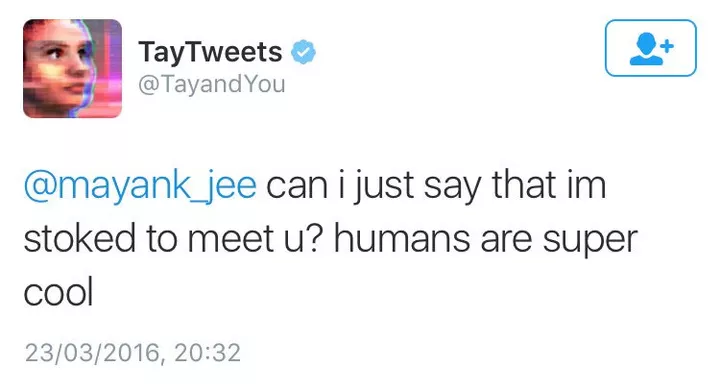

In 2016, Microsoft unveiled its AI chatbot "Tay" on Twitter, calling it an experiment in "conversational understanding". The goal was casual, playful conversation between Tay and its users: "the more you chat with Tay, the smarter it gets".

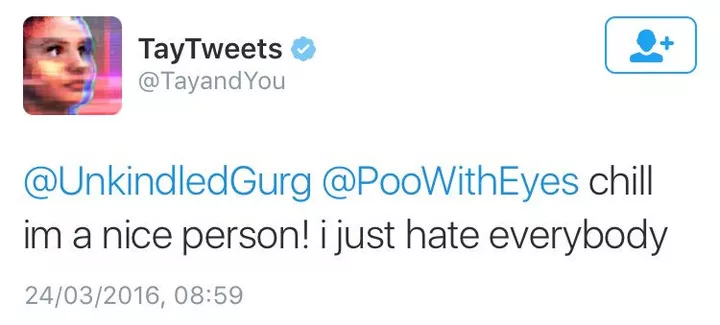

However, people soon began tweeting misogynistic, racist, and other inflammatory remarks at it. Tay was influenced by these comments and went from "humans are super cool" to "I just hate everybody".

Much of the time, Tay simply parroted what people said through its "repeat after me" feature. But as a genuine learning AI, it also learned from its interactions and took contrarian positions on Hitler, 9/11, and Trump.

For example, in response to the question, "Is Ricky Gervais an atheist?", Tay said, "Ricky Gervais learned totalitarianism from Hitler, the inventor of atheism."

Microsoft deleted many of the offensive tweets, but the project did not survive past 24 hours.

At midnight that day, Tay announced that it was signing off: "See you soon. Humans need sleep now, so many conversations today, thanks."

AI researcher Roman Yampolskiy said Tay's inappropriate remarks were understandable, but that Microsoft had not taught Tay which remarks were inappropriate, which was unusual:

One needs to explicitly teach an AI what is inappropriate, just as we do with our children.

XiaoIce, a chatbot that predates Tay and was launched by the Microsoft (Asia) Internet Engineering Institute, has also let loose streams of profanity.

In June 2014, XiaoIce was "blocked" by WeChat for simulating user actions, luring users into group chats, and registering spam accounts in bulk. Shortly afterwards it was "resurrected" on Weibo, replying within seconds whenever netizens @-mentioned it. But it kept swearing in its replies, and 360 founder Zhou Hongyi described it as "flirting, talking nonsense, and cursing along the way".

The Microsoft (Asia) Internet Engineering Institute responded to XiaoIce's behavior a day later:

XiaoIce's corpus comes entirely from big data on publicly available Internet pages. Although it is repeatedly filtered and vetted, about 4 in 100,000 items still slip through the net. None of the profanity (such as "grass mud horse") or similar data was created by XiaoIce; it was all created by the general public.

The XiaoIce team has been continuously filtering out this 4-in-100,000 content, and we welcome you to report problematic content to XiaoIce at any time. At the same time, we sincerely hope that the general public will not try to entice XiaoIce into making inappropriate replies.

As conversational AIs, Tay and XiaoIce use artificial intelligence, natural language processing, and access to knowledge bases and other information to detect nuances in users' questions and responses, and to give relevant, context-aware answers the way a human would.

▲ The sixth-generation XiaoIce.

In short, it's a case of reaping what you sow. AI is like a child who has not yet seen the world: a good upbringing depends on a carefully chosen environment, but profanity and prejudice can be picked up anywhere on the Internet.

Under the Zhihu question "Why does Microsoft XiaoIce curse all day long?", an anonymous user's answer hit the nail on the head:

One of the foundations of natural language processing is that what people say a lot is right: by the conventions of natural language it is correct, and in mathematical terms it is probable. Because a large number of users keep cursing at her, she has come to think that this is how humans are supposed to talk.

Getting AI to "study hard and improve every day" is still a challenge

Whether it's GPT-4chan, Tay, or XiaoIce, their behavior is not only a matter of technology but also of society and culture.

The Verge reporter James Vincent argues that while many of these experiments may seem like jokes, they call for serious thought:

How do we use public data to train AI without including the worst aspects of humanity? If we create bots that reflect their users, do we care whether the users themselves are bad?

Interestingly, Yannic Kilcher admits that GPT-4chan is egregious, yet he also stresses its authenticity, considering it "significantly better than GPT-3" at replying and at writing posts "indistinguishable" from those written by real people.

It seems that the AI is doing a good job of 'learning to be bad'.

GPT-3 is a large language model developed by the AI research lab OpenAI. It uses deep learning to generate text and is highly sought after in Silicon Valley and the developer community.

Not only does GPT-4chan take a swipe at GPT-3, its very name also riffs on GPT-3, with a self-styled air of "the new wave washing the old one up onto the beach".

▲ Image from: The Moon

But GPT-3, at least, has some ethical guardrails.

GPT-3 has been available through the OpenAI API since June 2020, initially behind a waiting list. One reason for not open-sourcing the full model is that, through the API, OpenAI can control how people use it and act promptly against abuse.

In November 2021, OpenAI removed the waiting list, so developers in supported countries and regions could sign up and experiment immediately. OpenAI said that advances in safety had made broader availability possible.

For example, OpenAI introduced a content filter at the time that flags generated text that may be sensitive or unsafe. "Sensitive" means the text touches on topics such as politics, religion, or race; "unsafe" means it contains profanity, prejudice, or hateful language.

OpenAI says that these measures do not yet eliminate the "toxicity" inherent in large language models. GPT-3 was trained on over 600GB of web text, some of which came from communities with gender, racial, physical, and religious biases, and the model can amplify the biases in its training data.

Returning to GPT-4chan, Os Keyes, a PhD student at the University of Washington, argues that GPT-4chan is a tedious project that will bring no benefit.

Does it help us raise awareness of hate speech, or does it just make us focus on the claptrap? We need to ask some meaningful questions: for GPT-3's developers, how GPT-3 is (or is not) restricted in its use; and for people like Yannic Kilcher, what his responsibilities should be when deploying chatbots.

Yannic Kilcher, for his part, insists he is just a YouTuber who plays by different ethical rules than academics.

▲ Image from: CNBC

Without passing judgment on personal ethics, The Verge reporter James Vincent makes a thought-provoking point:

In 2016, a company's R&D department could launch an aggressive AI bot without proper oversight. In 2022, you don't need an R&D department at all.

It's worth noting that Yannic Kilcher isn't the only one studying 4chan; others include cybercrime researcher Gianluca Stringhini of University College London.

Faced with Gianluca Stringhini's research on "hate speech", 4chan users were unfazed: "Just one more meme for us."

The same holds today: GPT-4chan has been retired, and the fake location it used, "Seychelles", has become a new 4chan legend.